Abstracts

Abstract

When a qualitative leap forward is taken in any scientific discipline, the change is usually accompanied by an increased interest in research. This occurred in Translation Studies in the 1950s and in the 1990s. This paper outlines some of the most important epistemological and methodological questions faced by researchers who want to apply the so-called “scientific method” to empirical research in translation. We will discuss the main steps in the research process: designing an experiment, selecting subjects or the object of study, defining experimental and control groups, controlling independent variables, and choosing instruments that measure what we want to measure and provide reliable data for analysis. The whole procedure should be intelligible and transparent, the objectives relevant and the results clear.

Keywords:

- Translation Studies,

- methodology,

- scientific method,

- empirical studies,

- criteria,

- critique

Résumé

In any scientific field, when a qualitative leap occurs, it is generally accompanied by a genuine passion for research. This is what happened in translation, first in the 1950s and then in the 1990s. The aim of this work is to examine some of the main methodological and epistemological questions faced by empirical research in translation when it comes to applying what is conventionally called the “scientific method.” We will study the essential points that must be taken into consideration during the research process: designing the study, selecting the individuals and subjects of the research, defining experimental and control groups, controlling independent variables and choosing the instruments used to measure the relevant parameters in order to obtain reliable data. Such a procedure should be characterised by the intelligibility and transparency of the scientific process, as well as by the relevance of its objectives and the clarity of its results.

Keywords:

- Translation Studies,

- methodology,

- scientific method,

- empirical studies,

- critique

Article body

Introduction

When a scientific field undergoes a qualitative leap, the change is usually accompanied by marked enthusiasm for research. This happened in translation, first in the mid-1950s, when translator training became a university discipline with the creation of translation faculties in Montreal, Leipzig and Paris, and later in the 1990s, when other countries, Spain for instance, started undergraduate and doctoral programmes in translation and interpreting. There was a move in Translation Studies to appear more “scientific,” to obtain the “truly scientific status” postulated by Gideon Toury. From the mid-1980s onwards, Translation Studies began adopting the formalism and even the symbolism of the natural and social sciences[2] and appropriating the methodology and tools of these disciplines. The argument was that however good theoretical principles were at explaining observable phenomena in a specific field, these constructs only acquired scientific and epistemic value if they could be operationalised; in other words, if they could be tested through systematic observation or rigorous examination in an experimental study. As Toury insists, empiricism “constitutes the subject matter of a proper discipline of Translation Studies [...] it involves [...] (observable and reconstructable) facts of real life rather than merely speculative entities from preconceived hypotheses and theoretical models” (1995, p. 1). Without wishing to belittle this tendency (which has undoubtedly represented a great step forward in our research), we are beginning to notice a certain “empiricism for empiricism’s sake” within the field: a huge number of studies and experiments are being carried out on very isolated issues or on issues of very little scientific relevance.
As Chesterman put it: “trivial problem, no problem, irrelevant discussion” (1998).[3] Either experimental designs are poorly set out (Chesterman himself criticised circular arguments, illicit generalisations based on atypical cases, the confusion between correlation and causality, false induction, and so forth), or, in many cases, researchers fail to define the general theoretical background against which the results should be understood. We endorse Amparo Hurtado’s reflection on research in our field: “We consider it urgent that the relevance of data in research across Translation Studies is established; that methods and tools should be chosen in accordance with the object under study and the study’s planned aims; that replication is encouraged, as well as contact between researchers” (2001, p. 199).

The aim of this article is to outline, necessarily concisely, some of the main methodological issues that emerge when empirical research methods are applied to translation. We look at the issues raised by a close examination of the postulates that have defined the natural sciences and have been integrated into the social sciences; in other words, the problems that arise when applying the “scientific method.” We also reflect on the implications this has for our research. We discuss the main steps to take into account when designing a study, and we propose a research procedure shaped by the intelligibility and transparency of the scientific process, as well as by the relevance of its aims, its evidence and its results.[4] We believe that such a procedure must also be centred on integrity, as must all research founded on rationalism and pragmatism.

1. The “Scientific Method”: A Phases Model

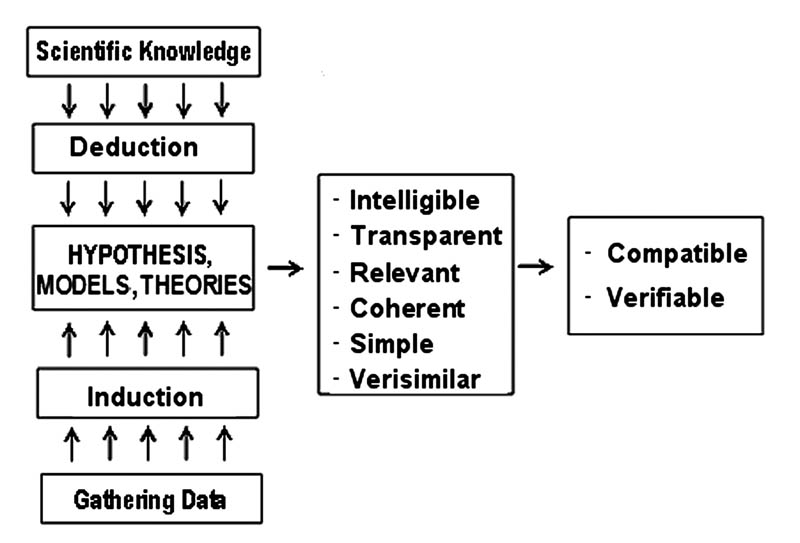

The “scientific method,” which is only one among many possible “scientific” approaches, is usually described through a phases model such as the one outlined in Figure 1.

1.1. The Theoretical-Conceptual Level

At the conceptual level (defining the problem, formulating empirically testable theoretical hypotheses and validating these hypotheses through the results obtained), Translation Studies research, despite its inherent difficulties, still shares guidelines and procedures with all scientific fields that have opted for an empirical approach to solving problems. When analysing empirical data and contrasting them with a starting hypothesis, Translation Studies can adapt and apply the well-defined tools used by the natural, social and human sciences. The research problems specific to each field arise, in particular, at the methodological level: when choosing an approach, when designing and planning the research, and when gathering data (especially when designing instruments to measure and compile data) that can be used to validate our hypotheses, always respecting the criteria of rigour associated with the different approaches as defined by logical positivism.

Figure 1: Research design, adapted from Portell, M. (2001)

1.2. The Methodological Level: Applying Rigorous Criteria

Let us briefly recall here the main criteria of “meticulous observation” demanded by the scientific method, in order to illustrate the problems of applying this methodology to our field.

Objectivity: The design of an experiment has to guarantee that the approach and tools used are independent of the researcher who uses them. In other words, if the study were carried out by other researchers, equivalent, or very similar, results would be obtained. The problem here is that the researcher (or the lecturer, in the case of didactic research) can, consciously or unconsciously, manipulate the stimulus and the results.[5] One solution could be to standardise the instructions, interventions and measurement tools so that other researchers would obtain the same results or, at the very least, to ensure clarity, transparency, intelligibility, comprehensibility and logic in the methodological approach, so that other researchers can understand the procedures that led to the results in question.

Internal validity: This criterion refers to the internal consistency of the approach. It demands that the design of the experiment guarantee control of all factors that could distort the results; in other words, control of all confounding variables (in our case, linguistic knowledge and general cultural awareness, previous experience, pedagogical input, time, and so on) and of the accuracy of the instruments measuring them. A solution might consist of carefully selecting the subjects and validating the instruments, as discussed below.

Repeatability: The design must guarantee that the results obtained in a particular experiment can be repeated in parallel experiments with other subjects, which implies complete transparency when selecting the sample.

Reliability: Measures should be taken to ensure that results are reliable indicators for the objectives that you wish to attain. In other words, it is essential to ensure that you are indeed measuring what you set out to measure. This is a crucial requirement in an empirical approach to research. Problems can emerge not only when designing the instruments for measuring what we wish to measure, but also when operationalising constructs (e.g., “translator competence,” “privileged translation”) as well as when defining the environment (population, corpus of texts) from which the sample will be taken. A solution lies in justifying the relevance of the selected corpus or subjects.

Extrapolability: The experiment has to be designed in such a way that the results obtained can be extrapolated into other situations, or, at least, can serve as a basis for formulating a working hypothesis for later research. In the case of translation didactics for example, the only experiments that make any sense are those whose results are valid for many translation situations and which have a general relevance in the field of teaching, and by extension in translation theory.

Quantifiability: This criterion refers to the idea that the data obtained must be quantifiable (in other words, expressible in numbers). Many researchers erroneously believe that results which are not the product of a chi-square test, a t-test, a Pearson correlation (r) or an analysis of variance have no explanatory power whatsoever. However, in our field, categorical or qualitative analyses can be equally appropriate, provided that the precepts of Cartesian evidence are always borne in mind when interpreting the results.
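By way of illustration only, the following Python sketch computes Pearson’s chi-square statistic for a contingency table, the kind of categorical analysis mentioned above. The counts (expertise(+) and expertise(-) subjects who did or did not identify a given translation problem) are invented for the example and do not come from any study; the function name chi_square is likewise our own.

```python
def chi_square(table):
    """Pearson's chi-square statistic (no continuity correction) and degrees of freedom.

    `table` is a list of rows of observed counts.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count we would expect if rows and columns were independent
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof

# Hypothetical counts: rows = expertise(+), expertise(-);
# columns = problem identified, problem not identified
observed = [[18, 6], [9, 15]]
stat, dof = chi_square(observed)
print(f"chi2 = {stat:.3f}, dof = {dof}")  # chi2 = 6.857, dof = 1
```

With one degree of freedom, the conventional critical value at the 0.05 level is about 3.84. In practice a statistics library would normally be used instead, for instance SciPy’s scipy.stats.chi2_contingency, which by default applies Yates’ continuity correction to 2x2 tables and therefore gives a smaller statistic than this uncorrected sketch.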

Ecological or environmental validity: The experiment should reflect a real situation; it should represent the least artificial circumstances possible. This is obviously the most serious problem for all laboratory experiments, since laboratories are, by definition, artificial. It is difficult to design a situation in which the subjects (translators, for example) are not influenced by the environment itself or by the mere fact that they know they are taking part in an experiment. It is here that the tools of modern Translation Studies (think-aloud protocols or TAPs, interviews, surveys, physiological measurements) show their greatest weakness. One possible solution is the old research trick of concealing the real aim of an experiment when presenting it to the subjects or in the instructions.

In addition to the prerequisites already mentioned, the design of the experiment must respect other criteria (what we could call “experimental pragmatics”), among which we would particularly like to note practicability or scientific economy, criteria described by Giegler (1988, pp. 785-786) that demand that experiments be designed as simply as possible to avoid overloading the subjects, that they be manageable as a whole, and that the analysis of the results not require excessive effort from the researchers.

1.3. The Aim of Translation Studies Research

When the research objectives of a science such as Translation Studies involve neither the description and control of the world (as in the natural sciences), nor that of human behaviour (as in the social sciences), nor the analysis or interpretation of actual human interventions (as in the historical, legal or philological sciences), but rather the search for an ideal state (the potential results of a human intervention), that science will tend to develop its own form of theoretical abstraction and to seek a new research path. The resulting research procedure should focus not only on the rigour postulated by the “scientific method” but should also give priority to the practicality, transparency and relevance of the scientific process. It seems obvious that in a field as complex as ours the rules governing methodological rigour (not to be confused with experimental integrity) cannot apply in the same way as they do in fields such as thermodynamics or biomedicine.

2. Transparency in Translation Research Procedures

This section is subdivided according to the key steps that define the empirical research process, as described, for example, by Bunge (1972) or Neunzig and Tanqueiro (2007). The aim is to ensure the transparency of our conduct; in other words, to ensure that the procedure is intelligible to scientists not connected with the project or, as Umberto Eco (1977) put it in his book on how to write a thesis, that it includes all the elements necessary for the public to be able to follow it.

2.1. Justifying the Relevance of a Study

Karl Popper (1963) affirmed that the starting point for all scientific work is the problem, not the gathering of data. The eminent German educationalists Tausch and Tausch (1991) insisted that the importance of a study derives from the relevance of the problem it deals with. The first step in any research should therefore be to formulate well-defined and plausibly fruitful questions and to justify interest in the topic; in short, to stress its relevance.

All research serves (or at least should serve) to enrich human knowledge in general; we may speak of this as intellectual relevance (or research of general interest). Research of general interest would include investigative efforts aimed at improving life or improving human coexistence. These are of great value for society (socially relevant) and would include certain research in the fields of medicine, epidemiology, sociology, psychology, and so forth, and in particular in the area of research into maintaining peace.

All scientific fields also need research of scientific relevance, dedicated essentially to opening up new paths, evaluating new ideas and providing results (facts of interest from the point of view of a particular discipline). This is usually known as basic research. The problem here is twofold. On the one hand, every researcher (whether funded to carry out the project or keen to broaden their list of publications spectacularly) will say that their work is of “the utmost relevance for this field” and that they are providing “previously unsuspected truths”; after all, nobody is interested in “trivial truths.” On the other hand, it can never be said that research which today appears “esoteric” will not one day turn out to be highly relevant (imagine, for a moment, research aimed at isolating the “translator gene”).

Economic relevance would appear to be the domain of the technological sciences (concentrated in R&D), but in our area there are also projects that are defined essentially by their economic relevance, such as machine translation, lexicography or computer-aided translation tools. Closely related to the economic importance of research are studies aimed at making the working lives of professionals in the field easier; these we might describe as being of professional relevance.

Finally, there are scientific areas where research is also marked by pedagogical relevance: studies seeking the best way of transmitting the knowledge acquired by one generation to the next. In our field these studies are particularly important, since the more complex (not the more difficult) a task is, the more knowledge, competences, abilities and strategies need to be updated in order to achieve the objective. Hence the vital task, one which in the end defines our profession, of finding (through research) the best way of transmitting knowledge from one generation to another.

2.2. Introducing the Referential Framework

Starting from a bibliographical analysis, the referential framework is established and the antecedents of the study are described. This framework helps us systematise the question posed, aids us in drawing up a model and helps us decide on our research focus. Umberto Eco (1977), in his advice on writing a doctoral thesis, recommends that sources be practical, in other words, easily accessible to the PhD student, and manageable, in other words, within the student’s cultural reach.

2.3. Formulating Well-Defined Hypotheses

A well-defined hypothesis is formulated as a statement describing a fact in the scientific field that can be tested against data which either confirm or reject it. Hypotheses structure the relationships between variables that can be observed using deductive or inductive methods.

2.3.1. Criteria

In empirical studies, hypotheses must respect the criteria set out by Karl Popper and Carl Gustav Hempel, among others.

Compatibility: This refers to the fact that hypotheses should be compatible with scientific knowledge, with previous objective knowledge.

Verifiability: Hypotheses must be capable of being verified, or rejected, in empirical studies; they cannot be mere speculations, such as the following: “The translator gets under the skin of the author, guessing his chain of thought when that is not sufficiently explicit.”

Intelligibility: This means that other scientists can intellectually assimilate the reasoning used.

Verisimilitude: Hypotheses must be plausible, however verifiable or intelligible they might be. A hypothesis such as “The planets orbit around the sun with movements in step with Johann Strauss’s Blue Danube” fails this test: it makes no sense to waste money and time trying to prove it.

Relevance: This means there should be an obvious point to the exercise; it should have some scientific or professional interest. We have no idea what hypothesis motivated doctors Alan Hirsch and Charles Wolf, of Chicago,[6] to study the growth of President Clinton’s nose when he was telling lies during the Lewinsky case. They reached the conclusion that his nose grew, even though the slight enlargement was not obvious at first sight. In our opinion, such a study has no scientific relevance whatsoever.

Figure 2: Hypotheses in empirical studies

2.3.2. Three Levels of Hypotheses

Following the phases model, we draw up our hypotheses on three levels:

Theoretical hypotheses: These are presumptions or suppositions derived directly from an established theoretical model. They are formulated in general terms and cannot be verified directly through systematic (empirical) observation. An example is the theoretical hypothesis of the Pacte group: “Translation competence is expert knowledge […] made up of a system of sub-competences that are interrelated” (2003, p. 48). There is no point in negating a theoretical hypothesis, since the research itself would then make no sense. Thus, the following negation (TC standing for translation competence) cannot be posited: “TC is expert knowledge that is NOT made up of interrelated sub-competences.”

We decide on our research focus in relation to our theoretical hypothesis. We do not wish to discuss the scientific credentials of every approach here (all roads are equally “scientific”), but there are clearly problems, even “paradigmatic” ones, when choosing one focus over another. We either decide on a non-empirical study,[7] in other words a theoretical approach, or choose an empirical study within which we formulate our hypotheses.

Working hypotheses: These are deductions from a theoretical hypothesis that are open to validation by observation. They can be tested through multiple studies or experiments using a wide variety of instruments and designs, for example: “There exists a relationship of cause and effect between the degree of translation expertise and the identification of the problems of translation.” It should be possible to reject a working hypothesis through an empirical study, so it must be possible to formulate its negation: “There does NOT exist any relationship of cause and effect [...].” One danger lies in formulating tautological hypotheses, such as: “There exists a cause and effect relationship between the degree of TC and the accuracy of the translation.”

Operational hypotheses: These are formulated for concrete studies aimed at confirming or rejecting the working hypotheses and, indirectly, the theoretical hypothesis and the model. They predict how the variables will behave in a particular study and are derived from its experimental design.
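To make the step from a working hypothesis to an operational one more concrete, the block below shows one conventional way of stating it statistically (a standard formulation, not one proposed in the sources cited here). We assume, hypothetically, that the dependent variable is the number of translation problems identified in a given text, and that μ+ and μ- denote its mean in the expertise(+) and expertise(-) groups.

```latex
% H_0: the negated working hypothesis, expressed on the chosen indicator
% H_1: the operational prediction derived from the working hypothesis
\begin{aligned}
H_0 &: \mu_{+} = \mu_{-} \\
H_1 &: \mu_{+} > \mu_{-}
\end{aligned}
```

A statistical test can only lead us to reject or retain H0; rejecting it shows that the groups differ, and reading that difference as cause and effect depends on how well the design controls confounding variables.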

2.4. Deciding the Research Focus and the Strategy for Gathering Data

Depending on the problem posed, a researcher who decides on an empirical focus will then opt either for an empirical-observational investigation or for an experiment. This decision will be dictated by the aims and objectives of the research. If we are planning, for example, a study within the field of literary translation, we can take an empirical-observational approach. However, to find out how students react when they receive a certain type of feedback from their lecturer, it would be logical to set up an exploratory experiment. Furthermore, in an investigation as wide-ranging as that of the Pacte group (which seeks to clarify what translation competence is and how it is acquired), it is logical for the experimental study to follow the hypothetico-deductive method. Consequently, the strategy adopted for gathering data will also depend on the problem posed and the object under study.

Prominent researchers in our field have opted for case studies precisely because they maintain that the act of translation is so complex that research into a wide range of samples leads researchers to get lost amid too much information. In the end, the point is not to know how “translators” translate, but rather to learn how the great geniuses of our field do it. Paul Kußmaul (1993) defends this approach in his studies on creativity, as does Helena Tanqueiro (2004) in her studies on self-translation within literary translation theory.

Within the field of Translation Studies, exploratory studies are more common. These attempt to establish whether a conviction or idea that scholars have drawn from their own professional experience holds in reality and can be observed on a more general level, and whether the tendencies observed can be extrapolated to other similar cases; in other words, whether there is any foundation for it. These are often open approaches of the “What will happen if...” type, which Daniel Gile (1998) called open experiments. He further argued that concentrating only “on what was being looked for” leads to a lot of data (possibly the most interesting pieces) being overlooked.

There are branches of Translation Studies that must, of necessity, be based on directed observation, that is, on gathering data without any type of intervention or manipulation (field research). These include literary translation, where research is based on existing, unalterable data that cannot be manipulated experimentally. The problem with this type of study, based essentially on analysing translations, is a tendency to “falsify” results, with researchers choosing only those sources and examples that support their hypothesis. We believe that transparent research that opts for an observational approach must ensure two things (in addition, naturally, to what has already been said about the rationality of theoretical approaches). On the one hand, it should operationalise its theoretical principles through definitions that allow solid empirical verification; on the other, it must justify the selection of subjects, works or sources of analysis (why those particular works or subjects were chosen) as well as the criteria governing the gathering of data (for example, the types of examples that will be taken into account or discarded).

In recent years there has been an increase in laboratory experiments, in which experimental conditions are controlled, making it possible to eliminate confounding variables and to manipulate the variables we are interested in, while also offering more accurate measurement. The main problem here is the lack of environmental validity, i.e., the very artificiality of the situation in which the data are obtained. However, carrying out measurements in a natural environment (field work), where subjects perform in a natural context, is rare in our research (except in didactic situations) because it requires a great deal of effort from the researcher.

2.5. Defining the Variables of a Study and its Indicators

Variables in experimental research, or in an observational study, can be defined as everything that we are going to measure, control or study, from either a quantitative or a qualitative point of view; in short, everything closely related to our operational hypotheses, anything that influences a study. They include independent variables (those which can be selected or manipulated), dependent variables (which reflect the effect of the independent variables) and confounding variables (which should be controlled as far as possible so as not to distort the results obtained).

Independent variables are understood to have the capacity to influence, have a bearing on, or affect the phenomenon we are observing, for example: “the degree of translation expertise has a bearing on the translation process and the final product.” Pacte (2008) had to define the variable “expertise” and decided to treat it as a dichotomous variable: “expertise(+)”: generalist translators with six or more years of professional translation experience for whom translation is their main activity (at least 70% of their income); and “expertise(-)”: foreign language teachers in the Spanish EOIs (Official Language Schools) with six or more years of experience but no professional experience in translation.
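The Pacte criteria just described amount to a classification rule, which the Python sketch below expresses as such. The record fields (profession, years_experience, translation_income_share, has_translation_experience) and the function expertise_group are hypothetical names of our own, and the sketch does not model every aspect of the definition (for example, the restriction to generalist translators or to teachers working in the EOIs).

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Subject:
    # Hypothetical fields, chosen for this illustration
    profession: str                   # "translator" or "language_teacher"
    years_experience: int             # years of professional experience
    translation_income_share: float   # share of income from translation, 0.0 to 1.0
    has_translation_experience: bool  # any professional translation experience

def expertise_group(s: Subject) -> Optional[str]:
    """Assign a subject to "expertise(+)" or "expertise(-)", or return None if neither applies."""
    if (s.profession == "translator"
            and s.years_experience >= 6
            and s.translation_income_share >= 0.70):
        return "expertise(+)"
    if (s.profession == "language_teacher"
            and s.years_experience >= 6
            and not s.has_translation_experience):
        return "expertise(-)"
    return None  # the subject falls outside both definitions and is not sampled

print(expertise_group(Subject("translator", 8, 0.85, True)))         # expertise(+)
print(expertise_group(Subject("language_teacher", 10, 0.0, False)))  # expertise(-)
print(expertise_group(Subject("translator", 3, 0.90, True)))         # None
```

Writing the rule down in this explicit form is one way of making the sampling criteria transparent and repeatable for other researchers.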

Figure 3: Variables in experimental research

Dependent variables can be defined as the observable consequences of the researcher’s manipulation, or selection, of an independent variable. Finding one or more dependent variables that validly measure what we really wish to measure is of major importance in the design of a study. To make a variable measurable, it is crucial to start from the theoretical definition already drawn up and to define the dimensions into which the variable can be broken down. These dimensions, which are the empirical correlates of the theoretical concepts we are interested in, are the indicators of the variables we are trying to measure.

Confounding variables are external influences that can distort the results obtained in a study (the influence of a confounding variable is often erroneously attributed to an independent variable). They should be eliminated or controlled when designing the study. Controlling confounding variables is a huge problem in literary Translation Studies, as one of the characteristic dilemmas of that research shows: determining which translations should form part of the corpus to be analysed (the entry of unqualified people into the profession has meant that translations have been published by “translators” who lack even basic linguistic competence). The interest of studying self-translations lies in the fact that they allow us to eliminate these undesired variables: self-translators do not misinterpret themselves, and they have sound bilingual and bicultural competence. In experimental studies, control can be achieved in different ways: by eliminating an undesirable variable (e.g., if the study deals with inverse translation, students with German as their mother tongue are excluded from it) or by keeping its influence constant (forming groups that are truly “parallel”). In tracking studies (e.g., in didactic research), it is very difficult to control external factors (length of stay in a foreign country, infatuations included, work experience placements or employment, and so on).

2.6. Defining the “Universe” of the Study and Extracting a Sample

This step involves determining who (or what) we wish to observe. The universe (the “population” or the “collective”) is the set of reference elements, whether subjects (e.g., professional translators) or objects (e.g., self-translated works), that are to be observed. It is defined by a distinctive common characteristic, which is what is studied. In our field, however, there are no external criteria for defining and delimiting this universe, e.g., “expert translators.” The solution to this problem is a pragmatic decision, such as the Pacte decision mentioned earlier.

Defining the universe (and, with it, drawing up a sample) is vital for the interpretation (always subjective) and extrapolation (valid only within a defined universe) of the data gathered. As it is not possible to observe the entire population (the “universe”) we are interested in, a representative sample of it is taken. The standard way of obtaining such a sample is random selection, which, for obvious reasons, is rare in our field. Quota sampling (selecting according to certain percentages of the population, for example of male and female translators or of translators of different ages) is also uncommon. Here we fully agree with Daniel Gile, who warned of the difficulty of selecting random samples and proposed “convenience” sampling: “An acceptable approximation can sometimes be found in the form of critically controlled convenience sampling, in which subjects are selected because they are easy to access but are screened on the basis of the researcher’s knowledge of the field and (ideally) of empirical data derived from observation and experimentation” (1998, p. 77).
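The difference between simple random selection and quota sampling can be sketched in a few lines of Python. The pool of translators and the quotas (six women and four men in a sample of ten, i.e. 60% and 40%) are hypothetical, as are the function names. In the quota function, each quota is filled with the first suitable subjects available, which is what distinguishes quota sampling from random selection within each category.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Select n elements without replacement, each element having the same chance."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible and reportable
    return rng.sample(population, n)

def quota_sample(available, key, quotas):
    """Fill each quota with the first available elements of the corresponding category.

    `available` is ordered by availability (e.g. the order in which subjects volunteer),
    `key` maps an element to its category and `quotas` maps each category to a count.
    """
    remaining = dict(quotas)
    sample = []
    for element in available:
        category = key(element)
        if remaining.get(category, 0) > 0:
            sample.append(element)
            remaining[category] -= 1
    unfilled = {c: k for c, k in remaining.items() if k > 0}
    if unfilled:
        raise ValueError(f"quotas not filled: {unfilled}")
    return sample

# Hypothetical pool of 30 translators in order of availability: every third one is male
translators = [{"id": i, "gender": "male" if i % 3 == 0 else "female"} for i in range(30)]

random_sample = simple_random_sample(translators, 10, seed=1)
quota = quota_sample(translators, key=lambda t: t["gender"], quotas={"female": 6, "male": 4})
print(sorted(t["id"] for t in random_sample))
print([t["id"] for t in quota])  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Note that the quota sample simply takes whoever comes first in each category; this makes it close to the convenience sampling Gile describes, and it is the subsequent screening, not the procedure itself, that has to guard against bias.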

To create “parallel” samples (experimental and control groups), the selected subjects are divided, normally at random. However, random assignment can play havoc with small samples, which are the norm in Translation Studies. For this reason, we can use pre-tests to draw up “matching samples”: the sample is divided into “pairs” (or “trios,” or “blocks”) of subjects with similar behaviour, and one member of each pair is assigned to one group and the other to a second group (and so on, in turn). There are statistical tests that allow us to determine whether two groups are really parallel.
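The matching procedure can be sketched as follows, under assumptions of our own: the pre-test yields a single numeric score per subject, pairs are formed by ranking subjects on that score, and which member of each pair goes to which group is decided at random (a common variant of the procedure described above). As one example of a test for parallel groups, the sketch computes Welch’s t statistic on the pre-test scores. The function names, subject codes and scores are all hypothetical.

```python
import math
import random
import statistics

def matched_pairs(scores, seed=None):
    """Split subjects into two groups matched on a pre-test score.

    `scores` maps a subject code to its pre-test score. Subjects are ranked by score
    and paired with their neighbour; one member of each pair goes to each group,
    the choice being made at random. With an odd number of subjects, the
    lowest-ranked subject is left unassigned.
    """
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get, reverse=True)
    group_a, group_b = [], []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # random order within the pair
        group_a.append(pair[0])
        group_b.append(pair[1])
    return group_a, group_b

def welch_t(a, b):
    """Welch's t statistic for the difference between the means of two groups."""
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    return (statistics.mean(a) - statistics.mean(b)) / se

# Hypothetical pre-test scores for eight subjects
pretest = {"S1": 72, "S2": 65, "S3": 80, "S4": 58, "S5": 77, "S6": 61, "S7": 69, "S8": 74}
group_a, group_b = matched_pairs(pretest, seed=42)
t = welch_t([pretest[s] for s in group_a], [pretest[s] for s in group_b])
print(group_a, group_b, f"t = {t:.2f}")
```

Obtaining a p-value requires the t distribution, for example via SciPy’s scipy.stats.ttest_ind(a, b, equal_var=False). A non-significant difference does not by itself prove that the groups are equivalent, particularly with samples this small.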

2.7. Determining the Tools for Gathering Data

If we were to believe (which is unlikely) that translation competence is essentially hereditary, our investigation would concentrate on isolating the “translation gene” and we would then bring into play all the tools of genetic research. If, on the other hand, we suspected that this competence depended on the professional’s personality, we would turn to one of the standard personality tests available in psychology. However, as we are convinced that TC is expert knowledge resulting from the interrelationship between different sub-competences, and as no standardised instruments are available to us, we try to adapt the tools of other sciences to our ends or, when this is not possible, create our own tools and validate them empirically.

To ensure the objectivity, reliability, extrapolability and ecological validity of the empirical approach and, above all, the relevance of the results obtained, it is vital to have effective instruments for gathering data and measuring them accurately. One of the main problems is our lack of previous experience, together with the above-mentioned lack of standardised tools. That is why we generally limit ourselves to what could be called “classic” tools (translations, questionnaires and interviews) and, more recently, TAPs. In recent years, Translation Studies research (especially research in interpreting) has used physiological and psychological indicators (for example, memory tests and autonomic nervous system responses) to shed light on the translation process. Even more recently, computer and communications technology has come into use (monitoring and recording programs such as Translog or Proxy, eye tracking and others).

The choice of texts to be translated is complicated by the fact that we need comparable texts for studies involving repeated measurement (e.g., longitudinal studies) or for studies involving more than one language. One possible solution is to use a single text divided into separate sections. This method was used in Neunzig and Tanqueiro (2005) and ensured that the “texts” (i.e., the various sections of a single text) were very similar: they were written by the same author, dealt with the same subject matter and used the same style and register. Another way of ensuring comparable texts is to use tools from psycho-sociology, such as Charles Osgood’s Semantic Differential adapted for our field (see Neunzig, 2004). PACTE solved the problem of finding parallel texts in different languages as follows: texts in German, French and English were sought that dealt with the same subject (computer viruses) and presented similar difficulties (based on “rich points”), and their comparability was then validated experimentally. One of the questions subjects were asked was: “What degree of difficulty would you estimate for this text?” In response, translators marked an X at a point along a line running from “this translation is very easy” to “this translation is very difficult.” An “index of difficulty” was calculated from these responses; the results are shown in Table 1.

Table 1: Index of difficulty

The most obvious result of this validation of the comparability of the parallel texts was the homogeneity of the translators’ estimates of the difficulty of the three texts proposed for direct translation. In other words, the language of the original text did not influence the subjects’ perception of its difficulty. This provides evidence that both the texts and the subjects were well selected (see PACTE, 2008).
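The article does not say how PACTE's index was computed. The Python sketch below assumes one plausible procedure: each X is measured in millimetres from the “very easy” end of a line (assumed here to be 100 mm long), rescaled from 0 (very easy) to 1 (very difficult) and averaged per text; homogeneity across the three texts is then checked with a one-way analysis of variance (ANOVA) F ratio. The marks are invented and do not reproduce Table 1.

```python
from statistics import mean

LINE_LENGTH_MM = 100.0  # assumed length of the answer line

def difficulty_index(marks_mm, line_length=LINE_LENGTH_MM):
    """Mean position of the X marks, rescaled to 0 = very easy, 1 = very difficult."""
    return mean(mark / line_length for mark in marks_mm)

def one_way_anova_f(groups):
    """F ratio: variation between texts relative to variation within texts."""
    values = [x for group in groups for x in group]
    grand_mean = mean(values)
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))

# Invented marks (mm) for the German, French and English source texts.
marks = {
    "German": [48, 55, 62, 50, 45],
    "French": [52, 58, 47, 60, 49],
    "English": [50, 46, 57, 53, 55],
}
for language, text_marks in marks.items():
    print(language, round(difficulty_index(text_marks), 3))
print("F =", round(one_way_anova_f(list(marks.values())), 3))
```

With these invented marks F is about 0.065: the differences between the texts are small compared with the spread of opinions about each text, which is the kind of pattern described above as homogeneity. `scipy.stats.f_oneway` would return the same F ratio together with a p-value.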

Questionnaires raise the difficulty of formulating questions in such a way that everyone understands them in the same way (so that they truly measure what we have set out to measure). This implies considerable additional effort on the part of researchers, since most of our questionnaires have to be drawn up and validated specifically for the project undertaken, as in the work carried out by Neunzig and Kuznik (2007) for PACTE.
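One widely used check when validating a purpose-built questionnaire is internal consistency, often summarised with Cronbach's alpha. The source does not say that Neunzig and Kuznik used this coefficient, so the Python sketch below only illustrates the general idea. It assumes items scored on a numeric (e.g. Likert) scale and all keyed in the same direction; the responses are invented.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(responses[0])                       # number of items
    items = list(zip(*responses))               # one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)

# Invented answers of six respondents to four items on a 1-5 scale.
responses = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 4, 5, 5],
    [3, 3, 3, 2],
    [1, 2, 1, 2],
    [4, 4, 5, 4],
]
print(round(cronbach_alpha(responses), 2))
```

A high alpha only shows that the items vary together; whether they measure what we set out to measure is a separate question of validity, which still has to be established empirically.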

Then there is the think-aloud method (verbalising while translating), better known by the abbreviation TAP (think-aloud protocol). It has proven effective in researching what happens within the mind of the translator, in the so-called Black Box. However, the method’s detractors criticise the artificiality of the situation (translators rarely work facing a video camera while explaining what they are thinking). These critics point out the difficulty some people have in verbalising their thoughts and maintain that TAPs give no access to automatic processes. In our opinion, a more valuable observation is that of the Copenhagen group (see Hansen et al., 1998), which concludes that one of the main problems of TAPs is the difficulty of carrying out two similar activities simultaneously, such as translating and verbalising one’s thoughts.

Immediate retrospection (retrospective TAPs) is intended to clarify what the subject was thinking during the translation process. It has the advantage of not interfering with the translation process, while the process is still fresh in the translator’s mind. However, it presents serious problems of objectivity and validity, since it may measure something we have not set out to measure, such as the subject’s memory or his or her capacity to adapt to the researcher’s expectations.

To avoid these difficulties, some researchers opt for dialogue protocols (TAP dialogue), in which two or three subjects carry out a joint translation and discuss it aloud. In this way, translators make more proposals, present more arguments, report and criticise more, seek support, and so forth. However, even one of the strongest advocates of this tool, Paul Kußmaul (1993), notes that it may merely be recording interesting data about the psychodynamic process rather than about the translation process.

2.8. Gathering Data

A good piece of advice for data collection is to limit the data to what is relevant to the study, especially in academic work such as written papers, essays or doctoral theses. Those who say “I’ll make a note of this data about so-and-so; it could come in handy” contravene the principle of practicality and scientific economy described by Giegler (1988). As mentioned above, he insisted that experiments be designed as simply as possible, to avoid overloading subjects, to keep them manageable and to ensure that analysing the results does not demand excessive effort from researchers.

2.9. Carrying Out a Statistical Analysis of the Data

Statistics provides procedures and techniques for describing and analysing the data obtained. We use descriptive statistical methods to summarise the basic characteristics of the sample, in the hope that these coincide with those of the “population” (the “universe” from which the sample has been taken), and inferential statistical methods, which are based on probability calculations and attempt to extend, or extrapolate, the information obtained from a representative sample to the entire population.
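The distinction can be made concrete with a short Python sketch: `describe` summarises the sample (descriptive statistics), while `bootstrap_ci` makes an inferential statement about the population mean. The scores are invented, and the percentile bootstrap is only one of several ways to build a confidence interval, chosen here because it needs nothing beyond the standard library and makes no normality assumption. It still presupposes that the sample is representative, the assumption discussed above in connection with sampling.

```python
import random
from statistics import fmean, median, stdev

def describe(scores):
    """Descriptive statistics: characteristics of the sample itself."""
    return {"n": len(scores), "mean": fmean(scores), "median": median(scores),
            "sd": stdev(scores), "min": min(scores), "max": max(scores)}

def bootstrap_ci(scores, level=0.95, n_resamples=10_000, seed=None):
    """Inferential statistics: percentile bootstrap interval for the population mean.

    Resamples the data with replacement, recomputes the mean each time and
    keeps the central `level` share of the resulting distribution.
    """
    rng = random.Random(seed)
    boot_means = sorted(fmean(rng.choices(scores, k=len(scores)))
                        for _ in range(n_resamples))
    lower = boot_means[int((1 - level) / 2 * n_resamples)]
    upper = boot_means[int((1 + level) / 2 * n_resamples) - 1]
    return lower, upper

# Invented quality scores for eight translations.
scores = [62, 70, 58, 75, 66, 71, 64, 69]
print(describe(scores))
print(bootstrap_ci(scores, seed=42))
```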

2.10. Interpreting the Results and Communicating Them to the Scientific Community

The last step in our scientific work is to compare the results with our hypothesis in order to corroborate or reject it. Rejection would oblige us to modify our hypothesis, theory or model, and this modification would in turn have to be validated in an empirical process, bringing the “wheel of science” full circle. The references below include publications I have been involved in, in which we have tried to apply the principles of transparency in translation research procedures presented in this article.
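Comparing results with a hypothesis usually means asking whether an observed difference, for example between an experimental and a control group, could plausibly be due to chance. The Python sketch below does this with a two-sided permutation test, one of several possible tests and not necessarily the one used in the studies cited; the scores and the 0.05 threshold are illustrative assumptions. A small p-value corroborates the hypothesis for the time being; it does not prove it.

```python
import random
from statistics import fmean

def permutation_test(experimental, control, n_permutations=10_000, seed=None):
    """Two-sided permutation test for a difference in group means.

    Under the null hypothesis the group labels are interchangeable, so we
    shuffle the pooled scores and count how often a random split produces a
    difference at least as large as the observed one.
    """
    rng = random.Random(seed)
    observed = fmean(experimental) - fmean(control)
    pooled = list(experimental) + list(control)
    n_exp = len(experimental)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = fmean(pooled[:n_exp]) - fmean(pooled[n_exp:])
        if abs(diff) >= abs(observed):
            at_least_as_extreme += 1
    # The +1 terms count the observed split itself and keep p above zero.
    p_value = (at_least_as_extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Invented post-test scores.
experimental = [80, 82, 85, 88, 90, 84]
control = [60, 62, 65, 58, 61, 63]
difference, p = permutation_test(experimental, control, seed=7)
ALPHA = 0.05  # conventional significance level, chosen for illustration
print(f"difference = {difference:.2f}, p = {p:.4f}, reject H0: {p < ALPHA}")
```

If p were above the threshold, the hypothesis would not be corroborated by these data, and we would return to the modification step described above.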

Summary

To summarise the contents of this article, we offer the following illustration, a synthesis of the steps required to achieve transparency in translation research procedures.

Figure 4: Transparency in translation research procedures

Appendices

Notes

[1] For this article, the author received the Vinay and Darbelnet Prize, awarded by the Canadian Association for Translation Studies (ACT). [Editor's note]

[2] We must not forget that, in the UNESCO nomenclature, our discipline is currently classified under the social sciences.

[3] Handout presented by Andrew Chesterman at a workshop on methodology at the EST Congress in Granada, 23-26 September 1998.

[4] Let us remind ourselves of the first rule of Descartes’ method, the precept of evidence: “I would not accept anything as true which I did not clearly know to be true. That is to say, I would carefully avoid being over hasty or prejudiced, and I would understand nothing by my judgments beyond what presented itself so clearly and distinctly to my mind that I had no occasion to doubt it” (1633).

[5] Max Weber (1922), one of the founding fathers of sociology, postulated that research should be wertfrei, that is, free of value judgements; a value judgement cannot be objective!

[6] See El País (1999).

[7] The deductive-axiomatic approach carries the danger of degenerating into mere “speculationism,” in other words, of axiomatically taking a speculation to be “true” and proceeding from it. As Tausch and Tausch put it: “Quoting established authorities a thousand times, whether they are called Pestalozzi, Freud or Skinner, does not convert speculation into science” (1971, p. 35).

Bibliography

- Bunge, Mario (1972). La investigación científica. Su estructura y su filosofía. [Scientific Research. Its Structure and Philosophy.] Barcelona, Ariel.

- Chesterman, Andrew (1998). “Handout.” Workshop on methodology, EST Congress, Granada, 23-26 September.

- Descartes, René (1633). Discourse on Method. Available at: http://records.viu.ca/~johnstoi/descartes/descartes1.htm [consulted 10 July 2010].

- Eco, Umberto (1997). Cómo se hace una tesis: técnicas y procedimientos de investigación, estudio y escritura. [How to Write a Thesis.] Barcelona, Gedisa.

- El País (1999). “Dos médicos confirman que la nariz crece al decir mentiras.” [“Two Doctors Confirm That Noses Really Do Grow When We Lie.”] Available at: http://elpais.com/diario/1999/05/23/sociedad/927410408_850215.html [consulted 6 May 2012].

- Giegler, Helmut (1994). “Test und Testtheorie.” [“Test and Test Theory.”] In Roland Asanger and Gerd Wenninger, eds. Wörterbuch der Psychologie. [Dictionary of Psychology.] Weinheim, Psychologie Verlags Union, pp. 782-789.

- Gile, Daniel (1998). “Observational Studies and Experimental Studies in the Investigation of Conference Interpreting.” Target, 10, 1, pp. 69-93.

- Hansen, Gyde (1998). “The Translation Process: From Source Text to Target Text.” In Gyde Hansen et al., eds. Copenhagen Working Papers in LSP, 1-1998, pp. 59-71.

- Hurtado Albir, Amparo (2001). Traducción y Traductología. [Translation and Translation Studies.] Madrid, Cátedra.

- Kußmaul, Paul (1993). “Empirische Grundlagen einer Übersetzungsdidaktik: Kreativität im Übersetzungsprozeß.” [“Empirical Bases for Translation Didactics: Creativity in the Translation Process.”] In Justa Holz-Mänttäri and Christiane Nord, eds. Traducere navem: Festschrift für Katharina Reiss zum 70. Geburtstag. [Traducere navem: A Book in Homage to Katharina Reiss on Her 70th Birthday.] Tampere, Tampereen Yliopisto, pp. 335-350.

- Neunzig, Wilhelm (2004). “Das Semantische Differential zur Erfassung der Textrezeption.” [“The Semantic Differential in Measuring the Reception of a Text.”] In Eberhard Fleischmann, Peter A. Schmitt and Gerd Wotjak, eds. Translationskompetenz. [Translation Competence.] Tübingen, Stauffenburg, pp. 159-173.

- Neunzig, Wilhelm and Anna Kuznik (2007). “Probleme der übersetzungswissenschaftlichen Forschung: zur Entwicklung eines Einstellungsfragebogens.” [“Problems in Translation-Based Research: Developing a Questionnaire on Translation Knowledge.”] In Peter A. Schmitt and Heike E. Jüngst, eds. Translationsqualität. [Translation Quality.] Frankfurt, Peter Lang Verlag, pp. 445-456.

- Neunzig, Wilhelm and Helena Tanqueiro (2005). “Teacher Feedback in Online Education for Trainee Translators.” Meta, 50, 4. CD-ROM.

- Neunzig, Wilhelm and Helena Tanqueiro (2007). Estudios empíricos en traducción: enfoques y métodos. [Empirical Studies in Translation: Approaches and Methods.] Girona, Documenta Universitaria.

- Neunzig, Wilhelm and Helena Tanqueiro (2011). “Zur Anwendung der naturwissenschaftlichen Methode in der Translationsforschung.” [“The Application of the ‘Scientific Method’ in Translation Research.”] In Peter A. Schmitt, Susann Herold and Annette Weilandt, eds. Translationsforschung. [Translation Research.] Frankfurt, Peter Lang Europäischer Verlag der Wissenschaften, pp. 599-614.

- PACTE (2003). “Building a Translation Competence Model.” In Fabio Alves, ed. Triangulating Translation: Perspectives in Process Oriented Research. Amsterdam, John Benjamins, pp. 43-66.

- PACTE (2008). “First Results of a Translation Competence Experiment: ‘Knowledge of Translation’ and ‘Efficacy of the Translation Process.’” In John Kearns, ed. Translator and Interpreter Training. Issues, Methods and Debates. London, Continuum, pp. 104-126.

- Popper, Karl (1963). Vermutungen und Widerlegungen. [Conjectures and Refutations.] Tübingen, Mohr Siebeck.

- Portell, Mariona (2001). “Handout.” Seminar on Methodology, Universitat Autònoma de Barcelona, 23-25 April.

- Tanqueiro, Helena (2004). “A Pesquisa em Tradução Literária: Proposta Metodológica.” [“Research in Literary Translation: A Methodological Approach.”] Polissema, 4, pp. 29-40.

- Tausch, Reinhard and Anne-Marie Tausch (1991). Erziehungspsychologie. Begegnung von Person zu Person. [Educational Psychology. A Face to Face Encounter.] Göttingen, Hogrefe.

- Toury, Gideon (1995). Descriptive Translation Studies and Beyond. Amsterdam, John Benjamins.

- Weber, Max (1922). “Der Sinn der ‘Wertfreiheit’ der soziologischen und ökonomischen Wissenschaften.” [“The Significance of Impartiality in the Sociological and Economic Sciences.”] In Max Weber. Gesammelte Aufsätze zur Wissenschaftslehre. [Essays in the Philosophy of Science.] Tübingen, Mohr Siebeck, pp. 489-540.

List of figures

Figure 1: Research design, adapted from Portell, M. (2001)

Figure 2: Hypotheses in empirical studies

Figure 3: Variables in experimental research

Table 1: Index of difficulty

Figure 4: Transparency in translation research procedures