Abstracts

Abstract

Information is central to modern economics, but mostly treated in a disembodied form. The paper suggests a semiotic approach to information that interprets information in terms of thermodynamic information theory. This builds on C. S. Peirce’s concept of finality, relating final causes to energetic transformations in the economy, which operate in the efficient-causal mode. I substantiate this argument in a semiotic analysis of design as mediating such transformations via technology.

Keywords:

- Semantic Information,

- Thermodynamics,

- Peirce,

- Final Causes,

- Technological Design

Summary

Information is at the heart of the modern economy, but it is most often treated in a disembodied form. The author proposes a semiotic approach that interprets information by means of thermodynamic theory. This approach builds on C. S. Peirce’s concept of finality, which relates final causes to energetic transformations in the economy, which in turn operate in the efficient-causal mode. The argument is supported by a semiotic analysis of design as a mediator of these transformations through technology.

Keywords:

- Semantic information,

- thermodynamics,

- Peirce,

- final causes,

- technological design

Article body

1. Introduction: Information, the Ghost in the Economy

Since Nicholas Georgescu-Roegen (1971) published his work on the entropy law and economics, many authors have pursued the project of putting economics and physics on common ground, though most of them are not academic economists (e.g. Kümmel 2013, a physicist). The core idea is that energetic throughputs drive economic growth: much effort has been spent on empirically vindicating this causal relationship (overview in Herrmann-Pillath 2014). This view has mostly been received in Ecological Economics, reflecting Georgescu-Roegen’s original approach, whereas standard economics treats energy as just one of many inputs into production, and not as a causal driver of growth. Georgescu-Roegen’s concern was that the relentless growth of energetic throughputs would result in ecological disaster, since he conceived the Earth system as physically closed in the material sense, such that wastes will accumulate and eventually destabilize the biosphere. As CO2 emissions are a kind of waste, the current climate crisis could be interpreted as vindicating his view.

However, many critics have pointed out that this economic application of thermodynamics is biased and partly misleading, since the planet is an open, dynamic and non-linear complex system fed by continuous inflows of solar energy which, for the human economy, come close to being a free good, barring the costs of transformation into useful work (for example, Kåberger & Månsson 2001). Accordingly, we could envisage engineering the human economy in a way that contains negative ecological side effects, such as sustaining a circular economy with complete recycling by solar energy flows, which would minimize negative externalities while maintaining the energy-growth causal link. A circular economy might grow forever, mimicking the biosphere in terms of thermodynamics and exporting the entropy generated to outer space via radiation (Kleidon 2016). In sum, there are two powerful arguments questioning the doomsday scenarios of thermodynamic approaches to economics, both of which rely on human inventiveness and technological creativity: one is to cut the link between energy and growth by drastically lowering the energy intensity of growth (‘decoupling’); the other is even to intensify energetic flows, though within a radically redesigned technological infrastructure of the economy.

In these approaches, knowledge ‘falls from heaven’, just as in standard neoclassical theories of growth. Indeed, the visions of a ‘dematerialized economy’ explicitly presuppose that human knowledge generation and information processing are disembodied. Henceforth, I will speak only of ‘information’ for reasons of terminological simplicity, since the necessary conceptual distinctions (such as treating knowledge as a stock and information as a flow adding to that stock) do not matter for the argument that I present. I want to explore the question of whether and how we can approach information from a thermodynamic viewpoint, that is, in the economic context, adopt a physical or embodied view of the role of information (i.e., new knowledge) in economic growth (on the fundamental issues, see Maroney 2009). In the context of the energy-growth topic, this is today partly recognized in the debates about the role of information technology in energy consumption (International Energy Agency 2020). However, this is just one manifestation of the more fundamental fact that information is physical, and it partly obfuscates the real issues by merely claiming, first, that there are technological solutions for reducing the energy intensity of computation and, secondly, that IT will help to reduce the energy intensity of all other technologies. In other words, while the energetic aspects of information in the digital technologies are recognized, their technological evolution is still viewed as being driven by disembodied flows of information (i.e., new knowledge).

Neglect of the physical nature of information is deeply entrenched even in the literature on energy and growth, which is informed by thermodynamics. Georgescu-Roegen put the theory on the wrong track by focusing exclusively on classical thermodynamics and explicitly declaring statistical thermodynamics irrelevant, even attacking Boltzmann, who had provided the theoretical basis for connecting entropy and information. This, however, led him into the trap of treating the planet as a closed system, and hence into drawing false conclusions about the allegedly inexorable working of the Second Law. This kind of false intuitive reference to thermodynamics still influences popular ideas about limits to growth and the need to launch ‘de-growth’, which are routinely debunked by protagonists of innovation and technological progress, both in scholarship and in politics.

Accordingly, there is the urgent need to define a concise physical approach to information in economics. This paper sketches a possible conceptual framework that is grounded in the semiotics of Charles S. Peirce. The point of departure is that existing approaches to the physics of information as applied in the context of ‘classical’ worlds employ the Shannon concept of information, leaving quantum physics aside, where a complementary physics of information is well developed. (For a perspective accessible to the general reader, see Lloyd 2006.) This ignores the semantic and pragmatic aspects of information, which, obviously, matter most when considering technology and economic processes. After all, these are physically scaffolded human actions.

Extending semiotics into the sciences has been a project most rigorously pursued in the field of biosemiotics, which has also inspired explorations into physics, most notably in the prolific writings of Stanley Salthe and co-authors (Salthe 1993, 2007, 2009; Annila & Salthe 2010; Herrmann-Pillath & Salthe 2011). To make the argument accessible to a cross-disciplinary readership, I avoid formal reasoning and argue in a principled way, taking a famous parable, Maxwell’s demon, as a pivot. The purpose is to show how energy, entropy and information relate to each other in technological evolution. (For a comprehensive treatment, see Herrmann-Pillath 2013.) I interpret Maxwell’s demon in a new way, simply by assuming that the demon’s goal is to generate work to sustain its operations. I can therefore approach the parable as representing both what Stuart Kauffman has introduced as the concept of ‘autonomous agents’ and a general concept of ‘technology’. In section 2, I critically review the existing approaches to physical information in economics, which focus exclusively on Shannon information as a quantitative measure of complexity. In section 3, I introduce my new view grounded in Peirce, which I synthesize with recent advances in Maximum Entropy theory in the Earth System sciences to develop a general frame linking energy to information. In section 4, I apply this framework to technological evolution as a driving factor in economic growth. Section 5 concludes by pondering the implications for the ecological design of the economy.

2. The Cul-de-Sac: Shannon Information as a Measure of Economic Complexity

The simple reason why economics has so far ignored the physical nature of information is that this is an extremely difficult topic. Yet, the difficulty is not its complexity, but the necessarily radical shift in our basic conceptions of the world. That is, the issue is philosophically difficult, but not, say, mathematically challenging, although this might be the case once proceeding on newly prepared philosophical grounds.

The single most important contribution to the question in the economic context was Robert Ayres’s (1994) book entitled Information, Entropy and Progress in which he already explored necessary conceptual shifts from a physicist’s angle. Yet later, even he only focused on the classical thermodynamics perspectives in investigating the role of energy in growth, sidelining the issue of information (e.g. Ayres & Warr 2009). Still, the 1994 book already opened the vista on almost all necessary conceptual innovations to define a physical notion of information. Where was the blockade that stopped further progress?

Seminally, Ayres already proposed a conceptual dualism consisting of two kinds of information. One is the standard Shannon notion of information, which is formally homologous to the Boltzmann definition of entropy, thus forming the backbone of common syntheses between thermodynamics and information theory (Volkenstein 2009). Ayres defines this as ‘D-information’. The ‘D’ in this term stands for ‘distinguishability’, which can be related to more general notions of complexity, as in the common algorithmic definition of complexity as ‘algorithmic incompressibility’ (Zurek 1989). The notion of distinguishability is also fundamental to the statistical and the classical concepts of entropy. The latter refers to the distinguishability of a system from its environment in terms of thermodynamic gradients (e.g. temperature difference), which, most significantly, led Gibbs to define a measure for ‘essergy’, i.e., available useful work. This topic connects the 1994 book to Ayres’s more recent work. It establishes a direct relation to the aggregate quantity of information in the Boltzmann sense embodied in a physical system that is thermodynamically distinct from the environment.
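The formal homology between Shannon information and statistical entropy can be stated compactly. What follows is the standard textbook form, not notation taken from Ayres; the symbols $p_i$ (probability of state $i$), $W$ (number of equiprobable microstates) and $k_B$ (Boltzmann constant) are introduced here purely for illustration.

```latex
\begin{align}
H &= -\sum_{i} p_i \log_2 p_i
  && \text{(Shannon information, in bits)} \\
S &= -k_B \sum_{i} p_i \ln p_i
  && \text{(Gibbs form of statistical entropy)} \\
S &= k_B \ln W
  && \text{(Boltzmann form, for } p_i = 1/W \text{)} \\
S &= (k_B \ln 2)\, H
  && \text{(same distribution } p_i \text{ in both expressions)}
\end{align}
```

The last line holds only when both expressions are evaluated over the same state space, which is exactly the condition that becomes problematic once the state space is no longer fixed by physical theory.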

Now, Ayres also introduced an entirely different notion of information, which he labelled ‘survival-relevant information’ (SR-information). With my own upcoming argument in mind, I pinpoint the essential difference from D-information. D-information is physical information independent of an observer. It is hence a quantifiable extensive concept, whereas SR-information is information generated by evolutionary processes, which makes it, in a very general sense, dependent on an observer (an ‘interpretant’ in Peircean terms, see below). Ayres does not systematically refer to evolution here, but the concept of ‘survival’ already establishes this connection, which he also elaborates. Just take a DNA molecule. We can analyse it in terms of D-information with reference to its structural complexity and distinguishability from other physical entities. Obviously, however, something essential would be missing. DNA embodies SR-information, which may come in two variants, useful and harmful. Clearly, this information is also ‘physical’, but in which sense? It is so in the sense of being a recipe for making something, that is, not simply as causing organismic development, but as being interpreted by the machinery that assembles the organism by ‘reading’ the recipe (Oyama 2001).

Although Ayres recognizes this, he remained stuck in further exploring the role of D-information in synthesizing thermodynamics and economics. The book continues by unfolding a truly ‘grand picture’ of evolution across various levels (cosmological, Earth system, biosphere, and human society), but rarely with any systematic reference to SR-information. Nevertheless, it argues that evolution maximizes ‘intelligence’ in terms of useful SR-information, weeding out harmful information in the stock of accumulating embodied knowledge. This implies a directionality of evolution. Ayres critically reflects on some hypotheses about directionality, most significantly Lotka’s (1922a,b) statement concerning the evolutionary extension of the laws of thermodynamics, which can be regarded as the precursor of many related hypotheses of more recent vintage, such as Bejan’s ‘Constructal law’ (Bejan & Lorente 2010), and which is certainly crucial for solving our problem. Lotka had argued that natural selection creates the physical tendency of increasing both the embodied energy in the biosphere (biomass) and the flux of free energy. This amounts to stating that evolution is governed by a principle analogous to the Second Law, i.e., a tendency towards maximizing the entropy exported to the environment while achieving greater structural complexity, which reduces the entropy of the corresponding bounded states. Ayres does not further pursue the obvious possibility that such a tendency may relate to the growth of embodied information, since he does not follow Lotka in his claims to universality. However, recent studies have shown that Lotka’s theorem can be theoretically vindicated for the general case of open non-linear non-equilibrium systems (e.g., Sciubba 2011). This has been deployed seminally in Odum’s (2007) comprehensive approach to ecology and economy. As we shall see, Lotka plays a pivotal role in recent advances in non-equilibrium thermodynamics of the biosphere as well as in Earth system dynamics.

Consequently, from this point onwards, Ayres treats information only in terms of complexity and diversity. This is a general phenomenon in most of the approaches relating economics and physics, as in the celebrated Santa Fe Institute tradition. The economy is seen as a complex system, and complexity is conceived in terms of what Ayres had labelled ‘D-information’. In the context of developing a mathematical progress function for the economy, Ayres does speculate that we could define an economic measure of progress that refers to accumulated useful information and that can be related to the thermodynamic distinguishability of an economic system or of an economic good from its environment. However, he goes on to introduce a more intuitive concept of information that distinguishes between thermodynamic information (chemical), morphological information (shape, structure etc.), control information (cybernetic), and symbolic information (communication, information, knowledge), eventually focussing on morphological information. Indeed, Ayres even intuitively subscribes to disembodying knowledge by treating it as a substitute for resources, as hard-nosed neoclassical theorists mostly do.

Morphological information relates to thermodynamic information in the sense that the latter is a potential for useful work. Useful work is necessary to enhance the morphological information of a product. Raw materials are processed and transformed into highly structured goods, and this requires energy enabling work. However, this is a very general observation that does not relate the precise kind of work to the form of the product, and it does not consider how far the product subsequently embodies a capacity for work that is grounded in embodied information. This is exactly where the notion of SR-information would come into play.

The reduction of the notion of information to a quantitative notion of complexity is also characteristic of more recent, still rare, attempts at linking thermodynamics to economics via information. A case in point is the celebrated book by another physicist, Hidalgo (2016), Why Information Grows. As in Ayres’s case, there is a deep gap between the discussion of thermodynamics in the first part of the book and the subsequent analysis of knowledge and information in the economy. Hidalgo makes the important point that the accumulation of information in non-linear non-equilibrium systems occurs with physical necessity. According to the author, this implies that physical systems factually enact computations. However, this remains a merely metaphorical use of the concept. Having introduced the notion of the embodiment of information in matter, Hidalgo jumps to the topic of complexity in terms of what Ayres calls ‘D-information’. This is applied both at the level of single goods and at the aggregate level, for example in analysing the complexity of the goods space of country exports as reflecting the underlying knowledge embodied in their production hardware and in the human actors (skills, human capital). Hence, Hidalgo does not go beyond Ayres in theoretical terms. Furthermore, he ignores Ayres altogether and does not include him in his list of references.

To summarize, the major problem in the state of the art is that physical notions of information remain almost exclusively informed by the Shannon concept of information, which is bolstered by the formal homology with entropy as defined by Boltzmann. What is entirely missing is a physical approach to what Ayres labelled as ‘SR-information’. Sketching a possible solution is the task of the next section.

3. A Semiotic Approach to the Physics of Information

3.1. Conceptual Limitations of Shannon Information

In this section, I will posit the general problem and argue that, philosophically, the adequate framework has been established by Charles S. Peirce and his theory of signs, as extracted from his voluminous writings in modern syntheses, especially Short (2007), whose study Peirce’s Theory of Signs I follow closely. It is necessary to tackle a fundamental question even deeper than physics, which is metaphysics in Peirce’s understanding. This is the question of causation (for an extensive Peircean approach, see Hulswit 2002). Obviously, I cannot adequately cover even the smallest part of the relevant philosophical and scientific literature here, so I merely introduce my own view and substantiate it with Peirce’s approach, the latter in terms of modern versions, especially in modern biosemiotics. My discussion has much in common with Terrence Deacon’s (2013) views on a radical alternative understanding of what ‘physical’ means, and it focuses on the role of final causation in a physical concept of information.

Boltzmann entropy as information is ‘physical’ because it refers to the physical state space, in terms of the positions and momenta of the atoms of a gas in a container. In contrast, Shannon information, though formally homologous, is non-physical because the state space is arbitrary. That reflects its origin as a physical theory of message transmission across channels: the state space relates to the medium in which a message is produced (such as the letters of the alphabet, given linguistic constraints on composition). In contrast to the physical state space, the state space of constituent symbols used in messages is arbitrary and relates to the sender and receiver of the message. Hence, it introduces a conceptual frame that is utterly foreign to standard physics, namely, the intentions of the sender and receiver of a message. However, this also applies when we consider technological artefacts, as in Ayres’s conception of morphological information. Its quantification in terms of bits depends on how we define the state space of the technological objects, which is entirely independent of the physical state space of the atoms and molecules that make up the artefact. For example, the embodied D-information of a screw depends on how many variants of screws we consider as possible forms or on how we further break down properties of screws such as length, windings, and so on, but not on the properties of the molecules that make up the screw.
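The dependence of the bit count on the chosen state space can be made concrete with a small numerical sketch. It assumes, for simplicity, that all variants are equally likely, so that the information needed to single out one screw is the base-2 logarithm of the number of variants. The function name `d_information_bits` and both property catalogues are hypothetical and serve only as an illustration.

```python
import math

def d_information_bits(state_space):
    """Bits needed to single out one variant, assuming equiprobable variants."""
    n_variants = math.prod(len(values) for values in state_space.values())
    return math.log2(n_variants)

# Hypothetical coarse description: only two properties are distinguished.
coarse = {
    "length_mm": [10, 20, 30, 40],
    "thread": ["metric", "imperial"],
}

# Hypothetical finer description of the very same physical objects.
fine = {
    "length_mm": [10, 20, 30, 40],
    "thread": ["metric", "imperial"],
    "head": ["flat", "pan", "hex", "round"],
    "drive": ["slot", "cross", "hex", "star"],
}

coarse_bits = d_information_bits(coarse)  # log2(4 * 2)
fine_bits = d_information_bits(fine)      # log2(4 * 2 * 4 * 4)
print(coarse_bits, fine_bits)
```

The same steel object thus carries 3 or 7 bits depending on which properties the describer chooses to distinguish, while nothing about its molecules has changed.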

Now, the important question is, “What determines this specific shape and structure of the state space?” It is the possible ways of using a screw: we would regard any kind of non-functional property of a screw as irrelevant to defining the state space, as this does not embody useful information. In other words, in producing screws we would focus on functional properties and leave all other properties open to variation without spending any effort on controlling them. Ultimately, these uses refer to human intentions. The screw is a message sent from the producer to the user of the screw, and the meaning of the screw consists in the ways it can be used, i.e., in its function. This example clearly demonstrates that we cannot rigorously separate D-information and SR-information when dealing with state spaces that are not determined by physical theory. That is why Shannon information is a radically incomplete theory of information (Brier 2008). It reveals the serious limitation of Shannon information in exploring the physics of information in the economy. Ayres had rightly stated that, in evolution, this refers to the ultimate evolutionary criterion of ‘selection for’: screws have been selected for fitting into nuts. The upshot is that what Ayres has labelled SR-information is functional information emerging from selection among what is a random assemblage of physical features. This points to approaching information in a physical theory of evolution, as envisaged, but not elaborated, by Ayres (cf., e.g., Chaisson 2001).

3.2. The Role of Finality in the Theory of Information: Rethinking Maxwell’s Demon

In biosemiotics, it has been argued that any approach to biological functions must extend the standard conception of causality in terms of the richer Aristotelian taxonomy, which includes formal and final causes (Emmeche 2002). For most scientists, this is a no-go, but the point is immediately obvious in the example of the heart. We can analyse the mechanisms of the heart in terms of efficient causes, but that would not give us any glimpse of what the heart is really doing and why. The ‘why’ question requires reference to proximate and ultimate causes, which are forms of final causes, answering ‘what for’ questions (for a comprehensive discussion in Peircean terms, see Short 2007). There are two important consequences. First, purposes or functions are types of physical events, not tokens. Hence, they are described as concepts. Second, these concepts define the structure of the state space in a contingent way, i.e. independently of universally valid physical laws: there is no physical law that explains why hearts pump blood. The shapes of the screw and the matching shape of the nut are types that manifest their function, and that can be actualized in an infinite number of tokens of many different materials and non-functional varieties.

John Searle (1996) has argued that the analysis of functions depends on the observer, just as in our previously established interpretation of the screw as a message connecting sender and receiver. Accordingly, this has always raised the issue of whether final causes must relate to a ‘designer’ or an entity with ‘intentions’, such as God in theologically inspired versions of teleology. But after Darwin, we know that finality of evolution can emerge endogenously as a purely physical phenomenon (as Deacon 2013 elaborates systematically in his distinction between ‘morphodynamics’ and ‘teleodynamics’). We can reconcile this with Searle’s argument on functions if we recognize that ‘observers’ are not disembodied, but physical systems that relate to other physical systems. (For a most general framework, see Wolpert 2001.) There is a famous physical thought experiment that exactly pinpoints this issue, namely, Maxwell’s demon sorting the molecules in a container, allegedly violating the Second Law. I will use this parable as a setting to unfold a physical semiotics of information (cf. more detailed, Maroney 2009).

The demon was introduced to support the argument that information processing may result in physical states that can produce work without any prior expenditure of energy, and hence without producing entropy. The demon is an observer, and the upshot of the complex debates about the parable is that observation requires physical interaction with the gas molecules, i.e. measurement. It also requires storing information about past measurements to inform further interventions, which includes recurrently deleting obsolete information to free up storage space. The demon pursues an intention, namely sorting the molecules according to their speeds, aiming at a physical state that can generate work via the resulting temperature gradient between the two compartments of the container. The crucial point concerning thermodynamics is that the demon might indeed be able to sort the molecules, but this only apparently violates the Second Law, as the demon is not disembodied (contrary to what the term ‘demon’ deliberately suggests, leading readers onto the wrong track). Instead, it consumes energy through its activity (measuring, processing and storing information, moving the separator). By implication, the ensemble of observer and container manifests the working of the Second Law, relative to the environment of the ensemble.
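The energetic cost of deleting obsolete information can be given an order of magnitude. The sketch below uses Landauer's principle, a standard result from the physics of computation that the text above does not name explicitly: erasing one bit at temperature $T$ dissipates at least $k_B T \ln 2$ of heat. The function name, the number of measurements and the memory temperature are all assumptions made for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact value in the SI)

def landauer_erasure_cost(bits, temperature_kelvin):
    """Lower bound on the heat (in joules) dissipated when erasing `bits` bits."""
    return bits * K_B * temperature_kelvin * math.log(2)

# Hypothetical scenario: the demon stores one bit ("fast" or "slow")
# per measured molecule and must eventually erase all of them.
n_measurements = 10**6
memory_temperature = 300.0  # assumed temperature of the demon's memory, in K

cost = landauer_erasure_cost(n_measurements, memory_temperature)
print(f"Minimum erasure cost: {cost:.3e} J")
```

The bound is tiny per bit, but it is strictly positive, which is what matters for the argument: an embodied observer cannot keep sorting without dissipating energy.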

We can interpret all living systems of any complexity, beginning with the simplest original self-replicating molecules, as observers in this sense (Elitzur 2005; Ben Jacob et al. 2006). We can even expand this view to all kinds of physical objects : every physical object embodies the causal sequences that have determined its current states. Accordingly, it could be conceived as observing the environment in which these causal forces originated. (This is called ‘environmental information’ in information theory; Floridi 2019). This enables human observers to do experiments, in which the conditions of interactions are controlled. However, this is only a special case of a universal phenomenon, which has been dealt with in those approaches to causation that treat causation as a transfer of physical information (e.g. Collier 1996). It is a minority view in the Philosophy of Science, unfortunately neglected in Hulswit (2002), but highly compatible with his semiotic approach to causation. A stone lies on the ground. It does not float in the air. All this embodies information about the physical laws, the constraints, and the causal sequences that explain its emergence. This view is in fact not alien to the modern sciences since all sciences that cannot rely on experiments must reason in this way, such as the geosciences, which indeed deal with stones (Baker 1999). Current geomorphology is treated as a ‘message’ that must be decoded. Its sender is ‘mother nature’. The scientists decode the information to reconstruct the causal sequences that explain current observations.

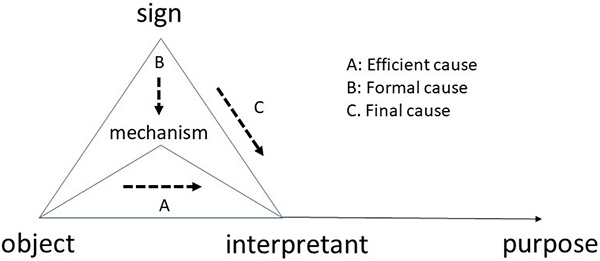

This is the basis for a physical interpretation of Peirce’s semiotics. Considering the demon again, it relates to the object, the gas, by making measurements. A measurement is a sign that focuses on certain aspects of the object, in this case location and momentum: this corresponds to the specific conceptualization of the state space of the gas, which in turn relates to the demon’s intention of sorting the molecules to achieve a physical effect. Before I develop the details of the demon’s semiotics, I present the fundamental semiotic structure as a reference frame (Fig. 1), which is a standard graphical approach in the literature (e.g. El-Hani et al. 2002; Brier 2008). I posit that the unit of physical information is the semiotic triad, which enhances the Shannon concept of information to a full-fledged concept of semantic information in which sender and receiver are no longer external but are essential aspects of information. The triad establishes the distinction between sign, object, and interpretant. The object is the ‘sender’ of the information, the receiver is its interpretant, whereas the sign mediates between the two. With Peirce, I refer to this as semiotic determination or ‘causation’ (Parmentier 1985: 27). Causation can be differentiated into the three modes of efficient, formal and final causes. It is an integrated dynamical process. Peirce does not speak of formal causes, but as Hulswit (2002) has shown, a crucial aspect of Peirce’s view on semiotic causation is that ‘form’ is transmitted from object to interpretant, with signs operating as natural kinds, which relate objects to final causes. I adopt this view of the process as a ‘formal cause’. The triad is bimodal (two embedded triangles) (Herrmann-Pillath 2013). There is an efficient-causal mechanism that relates object and interpretant, but the purposiveness of this relation is only established via the intermediation of the sign.

Fig. 1

The Semiotic Triad as Unit of Physical Information (Semantic Information)

Accordingly, I analyse the semiotic triad in the following way, illustrating it with the example of a rabbit interacting with a snake. The snake is the object. Rabbits respond to signs of snakes, such as specific kinds of noise or movement. A certain movement in the grass indicates the presence of the snake. Rabbits have been evolutionarily selected for avoiding snakes. Movement and response are efficient-causally related via the physics of vision, which does not yet explain why only a specific kind of movement elicits the escape response. (Cf. the respective discussion in teleosemantics, e.g. Neander 2006.) The sign is specific to snakes as natural kinds (a species in biology). That is, the sign is a formal cause that selects a certain type of observational interaction between object and interpretant from all possible kinds, such as movements of frogs in the grass. However, we can only explain this with reference to the final cause, which is the interpretant, i.e., the rabbit and the functional requirements of survival and reproduction. There is no way to distinguish between frogs and snakes on the grounds of mere efficient-causal interaction with rabbits.

3.3. A Conceptual Model of Causal Modes in Semiotics

We can generalize this model of the semiotic triad as a unit of physical information if we apply it to Maxwell’s demon (Fig. 2). Measurements are signs that trigger the demon’s actions, i.e. the sorting, just as the rabbit sorts incoming sensory data from the environment. Now, most significantly, the sorting does not work directly on the molecules, but via the separator. The separator is a macro-phenomenon relative to the micro-level of the Brownian motion, i.e., it introduces a constraint manipulated by the demon. The action results in a compression of the faster molecules in one part of the container, which is in turn a macro-state. For the demon, the location and momentum of the molecules in the two parts after separation do not matter at all anymore. Hence, the result of the action only relates to the macro-state. Let us assume that the demon aims at generating work that maintains its own function, i.e. the sorting. (Think of a steam engine, a circular process fed by energy inputs.) This amounts to what Stuart Kauffman (2000) has called an ‘autonomous agent’, i.e. a structure that maintains itself by realizing thermodynamic work cycles. We can approach Maxwell’s demon as an autonomous agent, of which the rabbit is just another example.

Fig. 2

Demon’s Semiotics

Object and interpretant are related through chains of efficient causality. The demon measures molecules, which is a causal interaction. The final state of the gas remains determined causally by the Brownian motion of the molecules, as the demon only moves the separator. The interpretant is the new macro-state, which is, physically, the assemblage of demon and container divided into two compartments with molecules of different speed. The demon’s action of moving the separator responds to the signs of the objects, i.e. the measurements. The signs are ambivalent, properties of the objects, but ‘assigned’ by the act of interpreting those properties, and they inform the sorting. This corresponds to measurement as a formal cause. A formal cause imposes a pattern on the processes driven by efficient causes, ultimately referring to the purpose of the sorting, i.e., the final cause. This distinction between the micro- and the macrolevel is the physical equivalent to the Peircean ontological distinction between individual and form related to finality, hence his conceptual realism of signs (Hulswit 2002).

As we can see immediately, the new macro-state embodies physical information, even in the Shannon and Boltzmann sense, in terms of being ordered and reflecting the result of the sorting. We now recognize the connection between thermodynamics and information in the physical sense. The ordered state corresponds to a state with lower entropy, which enables work. The demon could just pull out the separator. The energy would only dissipate in the container, and the molecules mix again. However, imagine the separator would be movable by the kinetic energy of the faster molecules : moving the separator is physical work. Whether work is generated or not depends on the specific macro-structure of the arrangement (Atkins 2007).
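The link between sorting, order and a work-enabling gradient can be illustrated with a small numerical toy. The following is only a sketch under strong simplifying assumptions that are not part of the argument above: molecules have unit mass and a one-dimensional random speed, mean kinetic energy stands in for temperature, and the function names (`run_demon`, `side_entropy_bits`) are hypothetical. It compares a mixed container with a sorted one and reports two macro-properties: the temperature proxy of each compartment and the Shannon entropy of the compartment in which a molecule sits, given its speed class.

```python
import math
import random


def mean_kinetic_energy(speeds):
    """Mean of v**2 / 2 for unit-mass molecules; a stand-in for temperature."""
    return sum(0.5 * v * v for v in speeds) / len(speeds)


def side_entropy_bits(is_fast, sides):
    """Conditional Shannon entropy H(side | fast or slow), in bits."""
    total = len(sides)
    h = 0.0
    for label in (True, False):
        group = [s for f, s in zip(is_fast, sides) if f == label]
        if not group:
            continue
        p_left = group.count("L") / len(group)
        for p in (p_left, 1.0 - p_left):
            if p > 0:
                h -= (len(group) / total) * p * math.log2(p)
    return h


def run_demon(n_molecules=10_000, seed=0):
    """Compare the macro-state of a mixed gas with that of a sorted gas."""
    rng = random.Random(seed)
    # Micro-level: every molecule has its own random speed (toy 1D model).
    speeds = [abs(rng.gauss(0.0, 1.0)) for _ in range(n_molecules)]
    # The demon's measurement: is a molecule faster than the median?
    median = sorted(speeds)[n_molecules // 2]
    is_fast = [v >= median for v in speeds]

    mixed = [rng.choice("LR") for _ in range(n_molecules)]   # no sorting
    sorted_sides = ["R" if f else "L" for f in is_fast]      # demon's sorting

    report = {}
    for name, sides in (("mixed", mixed), ("sorted", sorted_sides)):
        left = [v for v, s in zip(speeds, sides) if s == "L"]
        right = [v for v, s in zip(speeds, sides) if s == "R"]
        report[name] = {
            "T_left": mean_kinetic_energy(left),
            "T_right": mean_kinetic_energy(right),
            "H_side_bits": side_entropy_bits(is_fast, sides),
        }
    return report


if __name__ == "__main__":
    for state, values in run_demon().items():
        print(state, values)
```

In the mixed state the two compartments have nearly the same temperature proxy and the compartment of a molecule is almost maximally uncertain. After sorting, the uncertainty vanishes and a temperature difference appears. The toy does not model the separator or the extraction of work; it only shows that the ordered macro-state can be described without tracking any individual molecule.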

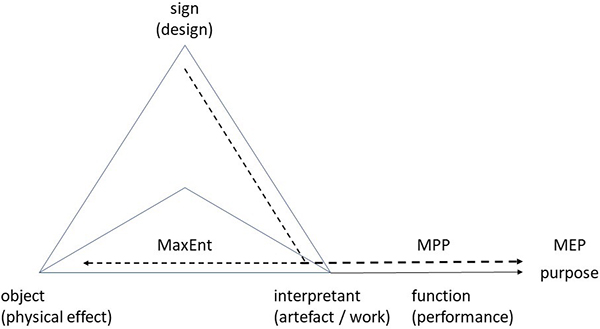

Fig. 3

Micro- and Macrostate Transitions in Demon’s Semiosis : A : Efficient Cause, B : Formal Cause, C : Final Cause

In Figure 3, I show the complete picture. It includes Peirce’s important idea that interpretants turn into objects in chains of semiotic processes. The result of the sorting, the macro-state of sorted molecules, can be measured in terms of macro-properties, i.e. the difference in temperature of the two compartments of the container. The demon can now calculate the amount of work that could be generated and can design a mechanical device that is driven by that work. This is the second-order interpretant. Now imagine that this work could be used by the demon to enable his measurements as in the first step. That would smack of a perpetuum mobile, but of course, the first circle of sorting would need to be pushed by an exogenous energy input. Furthermore, there is a subsequent loss of information when the demon has created the macro-state without knowing the micro-state ex post anymore. Hence, without feeding new energy into the system, the demon will lose its capacity to measure the microstates more and more. The Second Law in classical thermodynamics corresponds to a ‘Law of Diminishing Information’ (Kåhre 2002).

So far, we have established the basic connections between thermodynamics and information that emerge from analysing Maxwell’s demon as a semiotic process in three interacting causal modes. In principle, we can substantiate this argument with a full formal and mathematical framework of the thermodynamics of information as established by Kolchinsky & Wolpert (2018). Information is no longer conceived in terms of Shannon information, but in terms of work generated in ordered states that manifest thermodynamic gradients. What is missing is to make sense of the inherent reference to ‘intention’ in the figure of the demon. To render this in a more precise thermodynamic framework, I suggest building on the recent developments of the maximum entropy hypothesis (following Herrmann-Pillath 2013).

3.4. Enter the Maximum Entropy Framework

There is an interesting connection to economics in E.T. Jaynes’s mathematical framework for the maximum entropy concept, which debunked Georgescu-Roegen’s thermodynamic reasoning (Jaynes 1982), although Jaynes himself did not apply the concept to the economy or to physical systems in general, only to the theory of probability and inference (Jaynes 2003). In discussing it, I will distinguish between the ‘MaxEnt’ and the ‘Maximum Entropy Production’ (MEP) approaches (e.g. according to Dewar 2005, 2009). MaxEnt is the original Jaynes approach. It describes an inference procedure as it is practiced in econometrics, for example. If we face informational constraints regarding the microstates of a system, we identify the constraints under which the system operates and predict its future macro-states by assuming that, given these constraints, the final state will maximize the entropy of the micro-states. This is the state with the highest probability, given the constraints. If we interpret the constraints as hypotheses that we formulate about a system, this can be regarded as a formalization and specification of Peirce’s notion of abduction as the only form of inference that can produce new information, compared to induction and deduction. In an abductive inference, we posit various hypotheses and check which one renders the observations most plausible and closest to being the most likely one. It is worth noticing that this corresponds to the so-called ‘minimum description length’ approach in the theory of computational data compression, which minimizes the sum of the Shannon information in the algorithm that describes the hypothesis and in the algorithm that maps data into the hypothesis, a kind of Ockham’s razor. The hypothesis must be as parsimonious as possible, and the state of affairs must be most plausible, i.e., most probable (Adriaans 2019). In this sense, the two general notions of efficiency and maximization of entropy are combined.
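The MaxEnt inference step can be made concrete with a standard textbook illustration that is not taken from the present argument: a die whose only known macro-property is its long-run average. The sketch below assumes a single constraint (a prescribed mean) and uses the well-known result that the entropy-maximizing distribution then has the exponential form p_i ∝ exp(λ·x_i). The function name `maxent_distribution` and the bisection search for λ are illustrative choices, not part of Jaynes’s own presentation.

```python
import math


def maxent_distribution(values, target_mean, tol=1e-12):
    """Maximum-entropy distribution over `values` with a prescribed mean.

    Under a single mean constraint the solution is p_i ∝ exp(lam * x_i);
    the multiplier `lam` is found here by bisection.
    """
    values = list(values)

    def dist(lam):
        weights = [math.exp(lam * x) for x in values]
        z = sum(weights)
        return [w / z for w in weights]

    def mean(lam):
        return sum(p * x for p, x in zip(dist(lam), values))

    lo, hi = -50.0, 50.0          # the mean increases monotonically with lam
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return dist((lo + hi) / 2.0)


def entropy_bits(probs):
    """Shannon entropy of a discrete distribution, in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


if __name__ == "__main__":
    faces = [1, 2, 3, 4, 5, 6]
    for m in (3.5, 4.5):
        p = maxent_distribution(faces, m)
        print(m, [round(q, 4) for q in p], round(entropy_bits(p), 4))
```

With a mean of 3.5 the constraint adds nothing beyond the number of faces and the inferred distribution is uniform. With a mean of 4.5 the probabilities are tilted towards the higher faces, but no more than the constraint requires. In this sense the constraint plays the role of the hypothesis, and the procedure selects the least committal micro-level description compatible with it.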

As an inference mechanism, MaxEnt clearly refers to an observer, hence Jaynes champions the subjective interpretation of probability. Now, the question is whether there could be a real-world correspondence in terms of thermodynamics of physical processes. The basis for this idea was first prepared in the geosciences (overview in Kleidon 2009). The climate is an extremely complex system, so, could we predict its future changes by identifying relevant constraints and by assuming that the final state will maximize entropy? If so, would that not just be an inference procedure, but also reveal factual physical processes of maximizing entropy under constraints? This is the MEP hypothesis : The observer’s inference corresponds to the physical process that is observed.

The MEP hypothesis is physically meaningful once we consider complex open, non-linear and non-equilibrium systems such as the climate, but also living systems, in particular (Martyushev & Seleznev 2006; Dewar 2010; Martyushev 2020). Such systems will tend to maximize energy throughputs and thereby maximize entropy production in the ensemble of system and environment. This goes together with minimizing the entropy of the realized states, which can be interpreted as increasing the efficiency of processing the throughputs (cf. Fath et al. 2001). This is exactly the correspondence with the minimum description length approach in data compression. In this general sense, the MEP hypothesis can be related to other theories like the Constructal Law, which states that systems assume structural states that maximize flows through the system (Bejan & Lorente 2010).

However, coming back to the demon, can we reasonably assume that the demon will optimize its measurements according to MaxEnt? Will he thus maximize entropy production in the ensemble of container and demon? If we approach the demon as an autonomous agent, this assumption could only be defended if we consider a population of demons that compete over generating the best hypotheses and the best-designed devices to solve the problem of generating work via sorting, and which would be subject to selection according to performance. Indeed, this would be the adequate physical complement to the principle of abduction, which requires the generation and testing of a large variety of hypotheses, as suggested in theories of evolutionary epistemology (Popper 1982). In other words, we would assume a process of natural selection of demons, and only on the population level would we assume that the entropic principles would materialize. By implication, this means that the relationship between sign and final cause will be determined on the population level, though being embodied on the individual level. Indeed, this matches with the distinction of individual and species in evolutionary biology, where we observe a long history of debates over the ontological status of the two concepts of individual and species (Ereshefsky 2017). In our Peircean perspective, this corresponds to distinguishing between the object and sign and the different causal modes that determine the interaction between object and interpretant.

As a result, we can reinstate Lotka’s maximum power principle (MPP) as the necessary mediation of the MEP, as is also done in related discussions on the MEP in the Earth system sciences. The MPP establishes the conceptual link between any kind of process of selection governed by thermodynamic imperatives and the maximum entropy production principle, as originally argued by Lotka : MPP is a specification for thermodynamics in the context of systems that operate according to the principle of natural selection.

The universal triadic relationship between the three principles is depicted in Figure 4. This completes the basic model that we can now apply to technological evolution in order to show how information and thermodynamic imperatives work together in this case.

Fig. 4

Maximum Entropy and Semiosis

4. Physical Information in Technological Evolution

4.1. Techno-Semiosis

What are the implications for economics? I concentrate on one topic, the role of technology and design. Again, I just sketch the argument. We can understand technological evolution as the accumulation of semantic information governed by thermodynamic principles. This is grounded in the evolutionary approach to innovation as championed by Brian Arthur (2009) and other evolutionary economists (see the collection of seminal papers in Ziman 2000). This approach posits that technological innovation proceeds via the recombination of existing elements in a space of possible combinations. The selection criterion is to solve certain problems posed by the environment, given the goals of human actors. Thus, technological evolution is not driven by individual genius. It is a population-level phenomenon, where myriads of actors act on an open state space of technology which is occupied by certain existing objects continuously reshuffled and recombined until an actor recognizes a solution to a problem, so that a new object is fixed. Most importantly, this endogenizes the state space, as the new object represents a new state and can recombine with other existing objects to generate another new object, and so forth. Hence, the information embodied in technology grows in two senses. First, the number and complexity of types of combinations grows, and second, this set of realized combinations grows more slowly than the space of possible states expands via the creation of new objects. (On this important point, see Brooks & Wiley 1988; and Chaisson 2001).
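The claim that the realized combinations grow more slowly than the space of possible states can be checked in a deliberately crude toy model. The sketch below rests on assumptions introduced only for illustration: objects are numbered, every innovation fixes exactly one new object from a randomly chosen, not yet realized pair of existing objects, and the ‘possible’ space is restricted to pairwise combinations. The function name `recombine` is hypothetical.

```python
import itertools
import random


def recombine(n_primitives=3, steps=20, seed=1):
    """Toy recombination process with an endogenous state space.

    Returns one tuple per step: (number of objects, realized combinations,
    possible pairwise combinations of the objects existing at that step).
    """
    rng = random.Random(seed)
    n_objects = n_primitives
    realized_pairs = set()
    history = []
    for _ in range(steps):
        # All pairs of existing objects that have not yet been fixed as an object.
        open_pairs = [
            pair
            for pair in itertools.combinations(range(n_objects), 2)
            if pair not in realized_pairs
        ]
        realized_pairs.add(rng.choice(open_pairs))  # an actor fixes a new object
        n_objects += 1                              # which can itself recombine
        possible = n_objects * (n_objects - 1) // 2
        history.append((n_objects, len(realized_pairs), possible))
    return history


if __name__ == "__main__":
    for n, realized, possible in recombine():
        print(n, realized, possible, possible - realized)
```

Each step adds one realized combination, while the number of possible pairs grows by the current number of objects, so the gap between the possible and the realized widens at every step. Richer recombination rules (for example, combinations of more than two objects) would only widen it faster.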

Now, the important point is that many economic approaches to technological innovation focus on the production side, as growth theory just distinguishes between production and consumption. That is different in the management sciences, where user knowledge has long assumed an important role in understanding innovation. The semiotic perspective even further emphasizes the user perspective : this is simply the implication of stating that engineering is about problem solving, as problems are always user problems, including the producer who uses technology for production (Petroski 1996). Eventually, all actors in the economy are users.

I posit the following abstract view on technological evolution. We consider the evolution of technological objects in what is primarily a space of random recombination even if intentions are partly involved. However, without knowing the solution in advance, experimentation is ‘trial and error’, with error manifesting the inherent randomness of the process (Levinson 1988). Vis-à-vis this process, observations are made that categorize the objects according to a certain criterion. This is the sign in terms of certain macro-states : design. The ‘de-sign’ relates to the interpretant and the user of the object. Based on the design, the user infers information about the object that allows her to actualize a certain intention or purpose that can be fulfilled by the object, i.e. the solution to the original problem that triggered the technological evolution. This basic relationship is a clear instance of semiosis as analysed in the previous section (Fig. 5).

Fig. 5

Techno-Semiosis

Every technology harnesses a physical effect, hence triggers an efficient cause in generating its uses via a certain mechanism. However, this depends on imposing certain constraints on the range of possible physical variations of mechanism that define the state space of technology. These constraints are the design of the mechanism. Design, however, is not arbitrary, because it enables use : hence, design is a sign that mediates user inferences of how to use the artefact. This implies that users may infer potential uses that were not even intended by the producer : therefore, in the semiotic view, user knowledge is crucial, because it determines which information is inferred about the object as physical effect. In structuring the mechanism, design is a formal cause (even in the original Aristotelian sense as the sculptor’s design of the marble). However, the meaning of the sign is determined by the final cause, i.e. the uses of the artefact that fulfil a certain function. In a nutshell, ‘technology’ is not the artefact, but the entire semiotic triad conjoining a physical effect and its function.

Compared to the standard economic approach, the direction of causation is reversed since it is not primarily the producer who discovers and applies new information. It is the interpretant where capacities of artefacts are discovered, mediated, and eventually embodied in the design of the technology. Hence, again the full significance of this view only unfolds if we conceive this semiotic triad as reflecting evolution on the population level. In this case, we certainly consider populations of artefacts in the state space of technology (such as possible variants of molecules) as well as populations of users who explore potential uses. In this setting, we also envisage functions as evolving with the continuous exploration of the state space by the users, thus enabling phenomena such as exaptation, i.e. the discovery of new uses in pre-existing alternative contexts. Famous examples include the use of dysfunctional adhesive in post-its.

Think of the history of bicycles. Bicycles emerged from the recombination of previously existing elements, such as wheels and seats. As we know from the historical record, there was a large space of potential combinations, which was explored by producers in a trial and error process, gradually moving to improved designs. Producers and users can be merged in the inventor using the artefact himself, testing its features. On the mere mechanical level, the action of riding the bicycle is directly determined by efficient causality through the properties of the object harnessing physical effects. This corresponds to the micro-states, as inventors would not fully know and understand the detailed causal mechanisms that determine, for instance, the stability of the vehicle at a certain speed. In addition, there is a range of variations of those mechanisms compatible with the same macro-state, i.e. certain performances in use. Now, the point is that design imposes a structure on these micro-states that can no longer be understood as efficiently caused. The design relates the micro-state with purposes, or functions, and in this sense it allows the users to gain information about the object. Via the user and interpretant, the object embodies information that goes beyond the mechanical aspects of generating the performance. The entire evolutionary trajectory results in a growing space of possible variants of bicycles. This includes an increasing variety of non-functional variants, such as certain colours, which, however, may sometimes nevertheless assume functional value, such as marking bicycles by colour in bike-sharing arrangements.

4.2. MEPping Technology

Now, consider the demon again. The demon represents a technology. The physical effect is Brownian motion. It generates a variety of states of single gas molecules, which creates the possibility of sorting. The demon designs a mechanism of sorting that applies a measurement, thus operating a design that governs the sorting, i.e. the interpretant, resulting in the capacity to generate work. Any innovator acts like the demon in trying to sort out combinations of elements (‘molecules’) that produce a certain performance (‘speed’). Thereby, they embody information in the resulting macro-states, i.e. the design of the technology (the partitioning of the container into two parts). The design results in thermodynamic states in which work can be generated, and free energy is not simply dissipated into heat. Accordingly, we must assume, as Georgescu-Roegen had anticipated, but not elaborated, that technological evolution follows the Second Law. This is not the same, however, as stating, as Georgescu-Roegen did, that the Second Law is a mere physical constraint on technological evolution and economic growth. On the contrary, technological evolution is a manifestation of the Second Law. This becomes evident when we adopt the Maximum Entropy perspective on technology (Haff 2014).

Fig. 6

Physical Techno-Semiosis

If we refer this to the maximum entropy frame, we can say that technological evolution maximizes entropy in the MaxEnt sense (Fig. 6). This becomes apparent in the explosion of the quantity of bicycle variants, a process that continues unabated until today. The process involves two major characteristics : first, an increasing variety of designs that operate as constraints on the variation, and second, the simultaneous growth of individual variations. The increasing variety of designs means that the original physical effect, as embodied in the ‘Ur-bicycle’, is increasingly loaded with information. One specific design attains its informational load via the differentiation to other designs, such as the distinction between an off-road bike and a city bike. The information relates to the interpretant, i.e. the increasing scope of uses of bicycles.

Relative to the purposes of evolving bicycles, we can also say that the Maximum Power principle applies, namely, in two senses. One is the growth of the number of tokens of design types, as the growth of ‘technomass’. The other is improving the performance of the designs in terms of physical work transformed and generated at maximum efficiency.

The semiotic analysis shows that we cannot fully understand technology in terms of artefacts but need to conceptualize the population level, analogous to the biosphere as the level where evolutionary processes take place, which actualize the thermodynamics of the growth of information. This is the concept of the technosphere (Haff 2012; Herrmann-Pillath 2013, 2018). In practical terms, this means that in understanding the relationship between efficiency and ecology, we must avoid evaluating technology only on the artefactual level, i.e. by assuming that more efficient technologies will also contain the thermodynamics of growth. MPP and MEP are driven by population-level dynamics. They show in the trajectories of technologies and their ecosystems. In terms of the specific economic mechanisms, this has been recognized in the empirics of so-called ‘rebound effects’ discovered by Jevons in his discussion of the ‘coal question’. Increasing efficiency will eventually speed up the consumption of energy. (For a survey, see Azevedo 2014.)
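The arithmetic behind a rebound effect can be sketched in a few lines. The model below is an illustrative simplification and not the population-level mechanism argued for here: it assumes a single energy service whose demand responds to its cost with a constant price elasticity, and all names and default values (`energy_use`, `rebound`, `base_service`, `energy_price`) are hypothetical.

```python
def energy_use(efficiency, elasticity, base_service=100.0, energy_price=1.0):
    """Energy consumed for one service under constant-elasticity demand.

    efficiency: units of service delivered per unit of energy.
    elasticity: magnitude of the price elasticity of service demand.
    """
    service_cost = energy_price / efficiency          # cost per unit of service
    service_demand = base_service * service_cost ** (-elasticity)
    return service_demand / efficiency


def rebound(eff_before, eff_after, elasticity):
    """Share of the expected engineering savings that is lost to extra demand."""
    e0 = energy_use(eff_before, elasticity)
    e1 = energy_use(eff_after, elasticity)
    expected_savings = e0 * (1.0 - eff_before / eff_after)
    actual_savings = e0 - e1
    return 1.0 - actual_savings / expected_savings


if __name__ == "__main__":
    for eps in (0.0, 0.5, 1.0, 1.5):
        print(eps, energy_use(1.0, eps), energy_use(2.0, eps), rebound(1.0, 2.0, eps))
```

Under these assumptions, doubling efficiency halves energy use only if demand does not respond at all. With unit elasticity, energy use is unchanged, and with an elasticity above one it rises, which is the case Jevons described. Whether real economies fall into that range is an empirical question that the toy cannot settle.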

To summarize this section, we have now a concise semiotic conceptualization of Ayres’s SR-information as information embodied in technology with reference to functions that artefacts fulfil in an environment of human actors using artefacts in engaging with the problems they face. Lotka (1922b) already anticipated a fascinating conclusion, namely, that human intentions can only be conceived as proximate causes of technological evolution. Ultimate causes are determined by the physical laws that govern the dissipation of energy through technology and which must be conceptualized in the three causal modes of the semiotic triad. As Georgescu-Roegen argued, humans cannot overcome the Second Law. We can now make an even stronger statement. Technological evolution is a manifestation of the Second Law. The generation and accumulation of information is the other side of the physical coin of dissipation of energy in open non-linear non-equilibrium systems, such as the human economy. Information is physical.

5. Conclusion

Approaching information as a physical phenomenon requires adopting a new view on what ‘physical’ means. This was already achieved in the work of Charles Sanders Peirce, and it is time to follow authors such as Deacon (2013) in bringing in the rich intellectual harvest from applying Peirce’s thinking in specific scientific domains, as has been done in biosemiotics in recent decades. What are the implications for practical issues, primarily, of course, in meeting the challenge of climate change?

If the analysis is correct that the accumulation of physical information follows the laws of thermodynamics, the hope is forlorn that we can solve our dilemmas by technological progress in an unspecified way. Technosphere evolution will inexorably move towards the direction of maximizing the production of entropy on the level of the Earth system. However, that does not necessarily imply catastrophe. In the introduction, I cited the optimistic views that we could re-design technology in a way that mimics the biosphere, basically, in terms of a circular economy fed by solar energy (Kleidon 2016). However, this remains a highly abstract idea unless we tackle the serious and difficult questions of how to coordinate the evolution of the biosphere and the technosphere, even though both obey the same physical principles of evolving information. These questions require moving to a higher conceptual level, i.e. the Earth system view.

This view bears its own risks, as it might inspire new extensions of technology, such as in geoengineering the climate (Morton 2013). There is a principled philosophical issue here since the ‘systems’ notion suggests this kind of mechanistic approach, pretending a degree of coherence and computability which simply does not exist (Latour 2015). This even has political consequences, for example, in putting all bets on the benevolent action of a ‘climate leviathan’ (Wainwright & Mann 2018). On the contrary, one crucial consequence of moving to the metalevels of biosphere and technosphere evolution is to acknowledge the fundamental limits to human capacities of control and design. Some starting points are easy to identify. For example, even ecologically minded economists often maintain the position that the economy centres on human welfare (Llavador et al. 2015). This must be discarded in favour of an Earth-centred normative frame. This is the position of so-called ‘deep ecology’ approaches in Ecological Economics.

In practical terms, this means that we need to change our decision systems in a way that represents the entire range of ‘interests’ in the Earth system because only this can result in creative solutions for coordinating the biosphere and the technosphere. As long as we define this coordination only in terms of human interests, we cannot discover ways to reach sustainability. This has an interesting correlate on an abstract level. We need to find ways to include information embodied in the biosphere in human information processing, that is, in terms of its immanent meanings as functions. This cannot proceed only from our position as human observers. It means semiotically reconstructing the whole range of ‘Umwelten’ of all agents in the biosphere. This program of ‘zoosemiotics’ has already been launched by a few scholars. It goes back to the seminal work of Jakob von Uexküll (Maran et al. 2016). Such shifts of perspective cannot be achieved only by standard approaches of science trained in the straitjacket of efficient causality. They must be enriched by views from many other disciplines, especially the humanities and by all kinds of artistic creativity (Wheeler 2016; Herrmann-Pillath 2019). This amounts to a radical re-conceptualization of economics, which, however, seems feasible once we adopt the semiotics view. Semiotics is the most powerful conceptual frame that can re-integrate the two worlds that gradually separated in the course of the 19th century, the sciences and the humanities aka philosophy. Unfortunately, scholars are still few who work on semiotic approaches to economics that adopt the internal view of the discipline and apply it to ecological issues (for notable exceptions, Hiedanpää & Bromley 2016).

For economics, all this results in a surprising turn. Economics has always aspired to become as ‘scientific’ as possible. However, as Georgescu-Roegen seminally argued, economics has failed to acknowledge the relevance of even the most elementary physics in its theoretical constructs. As we have seen, the core question is the physical status of information, where mainstream economics is still blinded by its implicit Cartesian dualism. Yet, the move towards recognizing the physical nature of information also means restoring the role of meaning as finality in economics, and therefore suggests a reconsideration of the role of the humanities in creating a sustainable economy.

Appendices

Biographical note

CARSTEN HERRMANN-PILLATH is Professor and Permanent Fellow at the Max Weber Centre for Advanced Cultural and Social Studies, University of Erfurt, Germany. His major fields of research are economics and philosophy, institutional change and economic development, international economics and Chinese economic studies. His publications include 500+ academic papers and 18 books, covering a broad cross-disciplinary range in economics, the humanities and the sciences. After completing education in economics, linguistics and sinology at the University of Cologne (1988), he assumed Professorships in economics, evolutionary and institutional economics and Chinese economic studies at Duisburg University, Witten/Herdecke University and the Frankfurt School of Finance and Management (2008-2014) and taught at many universities, including the Universities of Bonn, Tübingen, St. Gallen, ETH Zürich, Tsinghua University and Beijing Normal University.

Bibliography

- ADRIAANS, P. (2019) “Information”. In The Stanford Encyclopedia of Philosophy (Spring 2019 Edition), E.N. Zalta (Ed.), https://plato.stanford.edu/archives/spr2019/entries/information

- ANNILA, A., and SALTHE, S.N. (2010) “Physical Foundations of Evolutionary Theory”. In Journal of Non-Equilibrium Thermodynamics (35) : 301-321.

- ARTHUR, W. B. (2009) The Nature of Technology : What It Is and How It Evolves. New York, NY : Free Press.

- ATKINS, P. (2007) Four Laws That Drive the Universe. Oxford : Oxford University Press.

- AZEVEDO, I.M.L. (2014) “Consumer End-Use Energy Efficiency and Rebound Effects”. In Annual Review of Environment and Resources (39) : 393-418.

- AYRES, R.U. (1994) Information, Entropy, and Progress : A New Evolutionary Paradigm. New York, NY : AIP Press.

- AYRES, R.U., and WARR, B. (2009) The Economic Growth Engine : How Energy and Work Drive Material Prosperity. Cheltenham : Edward Elgar.

- BAKER, V. (1999) “Presidential Address : Geosemiotics”. In GSA Bulletin (111)5 : 633-645.

- BEJAN, A., and LORENTE, S. (2010) “The Constructal Law of Design and Evolution in Nature”. In Philosophical Transactions of the Royal Society B (365) : 1335-1347.

- BEN JACOB, E., SHAPIRA Y. and TAUBER A. I. (2006) “Seeking the Foundations of Cognition in Bacteria : From Schrödinger’s Negative Entropy to Latent Information”. In Physica A (359) : 495-524.

- BRIER, S. (2008) Cybersemiotics : Why Information Is Not Enough! Toronto : University of Toronto Press.

- BROOKS, D.R., and WILEY, E.O. (1988) Evolution as Entropy : Toward a Unified Theory of Biology. Chicago, IL : Chicago University Press.

- CHAISSON, E.J. (2001) Cosmic Evolution : The Rise of Complexity in Nature. Cambridge, MA : Harvard University Press.

- COLLIER, J. (1996) “Causation Is the Transfer of Information”. In Causation, Natural Laws and Explanation. H. Sankey (Ed.), Dordrecht : Kluwer : 279-331.

- DEACON, T.W. (2013) Incomplete Nature : How Mind Emerged from Matter. New York, NY : Norton.

- DEWAR, R.C. (2005) “Maximum-Entropy Production and Non-Equilibrium Statistical Mechanics”. In Non-Equilibrium Thermodynamics and the Production of Entropy : Life, Earth, and Beyond. A. Kleidon & R. Lorenz (Eds.), Heidelberg : Springer : 41-55.

- DEWAR, R.C. (2009) “Maximum Entropy Production as an Inference Algorithm that Translates Physical Assumptions into Macroscopic Predictions : Don’t Shoot the Messenger”. In Entropy (11) : 931-944.

- DEWAR, R.C. (2010) “Maximum Entropy Production and Plant Optimization Theories”. In Philosophical Transactions of the Royal Society B (365) : 1429-1435.

- EL-HANI, C.N., QUEIROZ, J., and EMMECHE, C. (2006) “A Semiotic Analysis of the Genetic Information System”. In Semiotica (160) : 1-68.

- ELITZUR, A.C. (2005) “When Form Outlasts Its Medium : A Definition of Life Integrating Platonism and Thermodynamics”. In Life as We Know It. J. Seckbach (Ed.), Dordrecht : Kluwer : 607-620.

- EMMECHE, C. (2002) “The Chicken and the Orphan Egg : On the Function of Meaning and the Meaning of Function”. In Sign System Studies (30)1 : 15-32.

- ERESHEFSKY, M. (2017) “Species”. In The Stanford Encyclopedia of Philosophy (Fall 2017 Edition), E.N. Zalta (Ed.), https://plato.stanford.edu/archives/fall2017/entries/species/ (accessed, April 25, 2020).

- FATH, B., PATTEN, B.C., and CHOI, J.S. (2001) “Complementarity of Ecological Goal Functions”. In Journal of Theoretical Biology (208) : 493-506.

- FLORIDI, L. (2019) “Semantic Conceptions of Information”. In The Stanford Encyclopedia of Philosophy (Winter 2019 Edition), E.N. Zalta (Ed.), https://plato.stanford.edu/archives/win2019/entries/information-semantic/ (accessed, April 25, 2020).

- GEORGESCU-ROEGEN, N. (1971) The Entropy Law and the Economic Process. Cambridge, MA : Harvard University Press.

- HAFF, P.K. (2012) “Technology and Human Purpose : The Problem of Solids Transport on the Earth’s Surface”. In Earth System Dynamics (3) : 149-156.

- HAFF, P.K. (2014) “Maximum Entropy Production by Technology”. In Beyond the Second Law. Entropy Production in Non-equilibrium Systems. R.C. Dewar, C.H. Lineweaver, R.K. Niven, & K. Regenauer-Lieb (Eds.), Heidelberg : Springer : 397-414.

- HERRMANN-PILLATH, C. (2013) Foundations of Economic Evolution : A Treatise on the Natural Philosophy of Economics. Cheltenham : Edward Elgar.

- HERRMANN-PILLATH, C. (2014) “Energy, Growth and Evolution : Towards a Naturalistic Ontology of Economics”. In Ecological Economics (119) : 432-442.

- HERRMANN-PILLATH, C. (2018) “The Case for a New Discipline : Technosphere Science”. In Ecological Economics (149) : 212-225.

- HERRMANN-PILLATH, C. (2019) “The Art of Co-creation : An Intervention in the Philosophy of Ecological Economics”. In Ecological Economics, https://doi.org/10.1016/j.ecolecon.2019.106526 (accessed September 2020).

- HERRMANN-PILLATH, C. and SALTHE, S.N. (2011) “Triadic Conceptual Structure of the Maximum Entropy Approach to Evolution”. In BioSystems (103) : 315-330.

- HIDALGO, C.A. (2016) Why Information Grows : The Evolution of Order, from Atoms to Economies. London : Penguin.

- HIEDANPÄÄ, J., and BROMLEY, D.W. (2016) Environmental Heresies : The Quest for Reasonable. London : Palgrave Macmillan.

- HULSWIT, M. (2002) From Cause to Causation : a Peircean Perspective. Dordrecht : Kluwer.

- INTERNATIONAL ENERGY AGENCY (2020) “Digitalisation : Making Energy Systems More Connected, Efficient, Resilient and Sustainable”, https://www.iea.org/topics/digitalisation (accessed April 30, 2020).

- JAYNES, E.T. (1982) “Comments on ‘Natural Resources : The Laws of Their Scarcity’ by N. Georgescu-Roegen”. http://bayes.wustl.edu/etj/articles/natural.resources.pdf (accessed April 26, 2020).

- JAYNES, E.T. (2003) Probability Theory : The Logic of Science. Cambridge : Cambridge University Press.

- KÅBERGER, T. and MÅNSSON, B. (2001) “Entropy and Economic Processes : Physics Perspectives”. In Ecological Economics (36) : 165-179.

- KÅHRE, J. (2002) The Mathematical Theory of Information. Boston, MA, Dordrecht & London : Kluwer.

- KAUFFMAN, S.A. (2000) Investigations. Oxford : Oxford University Press.

- KLEIDON, A. (2009) “Non-Equilibrium Thermodynamics and Maximum Entropy Production in the Earth System : Applications and Implications”. In Naturwissenschaften (96) : 653-677.

- KLEIDON, A. (2016) Thermodynamic Foundations of the Earth System. Cambridge : Cambridge University Press.

- KOLCHINSKY, A. and WOLPERT, D.H. (2018) “Semantic Information, Autonomous Agency and Non-Equilibrium Statistical Physics”. In Interface Focus (8) : 20180041.

- KÜMMEL, R. (2013) The Second Law of Economics. Energy, Entropy, and the Origins of Wealth. Heidelberg : Springer.

- LADYMAN, J., and ROSS, D., with SPURRETT, D. and COLLIER, J. (2007) Every Thing Must Go : Metaphysics Naturalized. Oxford : Oxford University Press.

- LATOUR, B. (2015) Face à Gaïa : Huit conférences sur le nouveau régime climatique. Paris : La Découverte.

- LEVINSON, P. (1988) Mind at Large : Knowing in the Technological Age. Greenwich, CT : JAI Press.

- LLAVADOR, H., ROEMER, J.E., and SILVESTRE, J. (2015) Sustainability for a Warming Planet. Cambridge, MA : Harvard University Press.

- LLOYD, S. (2006) Programming the Universe : A Quantum Computer Scientist Takes on the Cosmos. New York, NY : Knopf.

- LOTKA, A.J. (1922a) “Contribution to the Energetics of Evolution”. In Proceedings of the National Academy of Sciences (8) : 147-151.

- LOTKA, A.J. (1922b) “Natural Selection as a Physical Principle”. In Proceedings of the National Academy of Sciences (8) : 151-154.

- MARAN, T., TØNNESSEN, M., TÜÜR, K., MAGNUS, R., RATTASEPP, S., MÄEKIVI, N. (2016) “Methodology of Zoosemiotics : Concepts, Categorisations, Models”. In Animal Umwelten in a Changing World : Zoosemiotic Perspectives, T. Maran et al. (Eds.). Tartu : University of Tartu Press.

- MARONEY, O. (2009) “Information Processing and Thermodynamic Entropy”. In The Stanford Encyclopedia of Philosophy (Fall 2009 Edition), E.N. Zalta (Ed.), https://plato.stanford.edu/archives/fall2009/entries/information-entropy/ (accessed April 27, 2020).

- MARTYUSHEV, L. M. (2020) “Life and Evolution in Terms of the Maximum Entropy Production Principle”. In Preprints 2020, 2020050017 (doi : 10.20944/preprints202005.0017.v1).

- MARTYUSHEV, L. M., and SELEZNEV, V.D. (2006) “Maximum Entropy Production Principle in Physics, Chemistry and Biology”. In Physics Reports (426) : 1-45.

- MORTON, T. (2013) Hyperobjects : Philosophy and Ecology after the End of the World. Minneapolis, MN : University of Minnesota Press.

- NEANDER, K. (2006) “Content for Cognitive Science”. In Teleosemantics : New Philosophical Essays. G. Macdonald & D. Papineau (Eds.), Oxford : Oxford University Press : 167-194.

- ODUM, H.T. (2007) Environment, Power, and Society for the Twenty-First Century : The Hierarchy of Energy. New York, NY : Columbia University Press.

- OYAMA, S. (2001) The Ontogeny of Information : Developmental Systems and Evolution. Durham, NC : Duke University Press.

- PARMENTIER, R.J. (1985) “Signs’ Place in Medias Res : Peirce’s Concept of Semiotic Mediation”. In Semiotic Mediation, E. Mertz & R.J. Parmentier (Eds.). Orlando, FL : Academic Press : 23-48.

- PETROSKI, H. (1996) Invention by Design : How Engineers Get From Thought to Thing. Cambridge, MA : Harvard University Press.

- POPPER, K.R. (1972) Objective Knowledge. An Evolutionary Approach. Oxford : Clarendon.

- SALTHE, S.N. (1993) Development and Evolution : Complexity and Change in Biology. Cambridge, MA : MIT Press.

- SALTHE, S.N. (2007) “Meaning in Nature : Placing Biosemiotics in Pansemiotics”. In Biosemiotics : Information, Codes and Signs in Living Systems. M. Barbieri (Ed.), New York, NY : Nova Publishers : ch. 10.

- SALTHE, S.N. (2009) “The System of Interpretance : Meaning as Finality”. In Biosemiotics (1) : 285-294.

- SCIUBBA, E. (2011) “What Did Lotka Really Say? A Critical Assessment of the ‘Maximum Power Principle’”. In Ecological Modelling (222) : 1347-1353.

- SEARLE, J. R. (1995) The Construction of Social Reality. New York, NY : Free Press.

- SHORT, T. L. (2007) Peirce’s Theory of Signs. Cambridge : Cambridge University Press.

- VOLKENSTEIN, M.V. (2009) Entropy and Information. Basel : Birkhäuser.

- WAINWRIGHT, J., and MANN, G. (2018) Climate Leviathan : A Political Theory of Our Planetary Future. London : Verso.

- WHEELER, W. (2016) Expecting the Earth : Life, Culture, Biosemiotics. London : Lawrence & Wishart.

- WOLPERT, D.H. (2001) “Computational Capabilities of Physical Systems”. In Physical Review E (65) : 016128.

- ZIMAN, J. (Ed.) (2000) Technological Innovation as an Evolutionary Process. Cambridge : Cambridge University Press.

- ZUREK, W. H. (1989) “Algorithmic Randomness and Physical Entropy”. In Physical Review A (40, 8) : 4731-4751.

List of figures

Fig. 1

The Semiotic Triad as Unit of Physical Information (Semantic Information)

Fig. 2

Demon’s Semiotics

Fig. 3

Micro- and Macrostate Transitions in Demon’s Semiosis : A : Efficient Cause, B : Formal Cause, C : Final Cause

Fig. 4

Maximum Entropy and Semiosis

Fig. 5

Techno-Semiosis

Fig. 6

Physical Techno-Semiosis