
Abstract

What happens when we mediate? What are the effects of mediation on the body? Are we being altered by technology? This article examines the unique occurrence of cross-modal perception found to be associated with moments of acute kinaesonic expression in real-time interactive digital performance. The function and significance of cross-modal perception are discussed in the light of the authors’ practice with the Bodycoder System and of new neuroscientific research into bodily self-consciousness and the sensory effects of new technology. Empirical findings are framed by a broader theoretical discussion that draws on John Cage’s notion of organicity and Gilles Deleuze’s sensual description of seizure, in opposition to Paul Virilio’s claim that telecommunications threaten the morphological stability of reality. The article argues that cross-modal perceptions, sensory mislocations, and the compensatory and synaesthetic effects of increasingly intimate and significant couplings of humans and technologies may not, as feared, represent a loss or disruption of perceptual reality, but may rather indicate the register of a hitherto unconscious human facility.


Article body

A theory of cultural change is impossible without knowledge of the changing sense ratios affected by various externalisations of our senses.

Marshall McLuhan[1]

Introduction

What happens when we mediate? What are the effects of mediation on the body? Are we being altered by technology?

In this data-drenched age of information exposure, of the deep proliferation of communication technologies and the cyborgization of the body, an analysis of the effects of our interactions with new technologies is important if, as McLuhan suggests, we are to begin to understand how such interactions are effecting change in arts practices. Most theorists and art critics take a broadly descriptive or generalised view when discussing the impact of new technologies on the arts. But it is perhaps within the more intimate realm of the senses, the psychophysical and the neurological, that new technology is producing its most significant changes. As artists working with interactive new technology, we focus not only on the development of new artistic products and the exploration of their emerging outward aesthetics, but also on the psychophysical effects of our interactions.

In discussing his use of “chance operations”, the composer John Cage, an early exponent of electronic music, stated that instead of “self-expression” he felt himself to be involved in “self-alteration”.[2] Through acts of creativity Cage observed his relationship to the raw material of his art in order to identify and negate his own propensity to control, to alter his perception and to redefine his role within the creative process. Cage saw this not simply as a method for creating artistic products but as a way of life: creative endeavour as a form of existential meditation and a means of self-alteration. For Cage, the practice was as much about moving inside a dynamic creative space as it was about observing himself and altering, through increased awareness, the way in which he operated within it. Cage’s notion of self-alteration is therefore not metaphorical; it is a concrete psychophysical effect of engaging in a creative process, a natural consequence of being-in-the-world as an artist, a life-art-process in which the boundaries between life and art are blurred. Fundamental to this approach was the notion of “divorcing oneself from thoughts of intention”[3] and from the temptation to take overall control of the making process. Cage sought to strip away conventional aesthetic principles through “chance operations” in order to discover the “ecological” nature of the creative process and to allow its natural “organicity” (the functioning of its innate ecosystem) to generate new forms.[4] Within any given quasi-ecosystem defined as a creative “work”, Cage saw himself first and foremost as a participant: one of several participating elements.

While part of our work with interactive technologies is concerned with the utility of configuring or encoding “control” (the polar opposite of Cage’s principle of creative non-intention), our aesthetic goal has been to discover a form of physical interaction with new technology that enables an “organicity” of expression at both the human and the computer level. Our technical endeavours have been concerned with developing facility: the performer’s utilitarian and expressive skills, and the ability of the hardware and computer systems to provide broad, multidimensional responsive environments that are accurate, sensitive and complex enough to sustain long-term interest. This drive for facility is fuelled by a belief that increased capability enables a greater and broader palette of interactive sensations and a more intimate and significant coupling of human and technology. Gilles Deleuze described such an aspirational coupling as going beyond the mere vibration of two elements within their own level or zone, abstracted and separate: it is a “coupling” that resonates in the manner of “seizure”,[5] as in “the sensation of the violin and the sensation of the piano in the sonata.”[6] This is not, generally speaking, how we understand our everyday relationship with new technologies. In the everyday we are forced to work with technologies developed by multinational companies and designed to meet the general needs of the many, not the specific needs of the few. This means that the vast majority of us have no choice in the way new technologies drive our behaviour. Indeed, one might go so far as to say that technologies tend to dictate the form and terms of our interactive behaviour. Off-the-shelf technologies[7] are inherently limited in what they will do, and therefore constrain our use of them. Yet new technology is changing our lives in profound and far-reaching ways.
Technology allows humans to operate machines and systems at speeds that far exceed human cognition. New technology allows us to multitask, and to search for and acquire vast amounts of data and information with more agility than our physical bodies alone could manage. Through new technology we can travel virtually, communicate with sites and people across the globe, and see and hear the world from the comfort of our office or home. On the surface, new technology seems to have the power to extend our bodies’ capabilities. Through our computer terminals we are able to reach out both to the real world and to the virtual worlds evolving in cyberspace. New technology seems to promise a utopia of new experiences and sensations. However, theorists such as Paul Virilio suggest that such technology merely recodes human perception as a function of computer processing and therefore does not enhance our perceptual experience. Virilio suggests that rather than extending the body, technology subjugates it, reducing the complexities and vagaries of the sensual to the utility of machine code. Further, he suggests that the ability of new technology to collapse space and time serves only to isolate the human body in the concrete present of real-time and geographic space.[8]

We know from a range of research that the proliferation of communication technology in our everyday lives is producing a range of psychophysical effects. One of the most publicised and commonly experienced is Continuous Partial Attention (CPA) disorder. CPA is a consequence of what Linda Stone[9] sees as the “always on” culture: a culture in which we are continuously bombarded with information, data and opportunity. Speaking about audiovisual excess, Paul Virilio suggests that the “orbital state of siege” in which we find ourselves is “temporally circumscribed by the instant interaction of telecommunications”, which confuses near and far, inside and outside, and thereby affects “the nature of the building” (our intellectual constructs), “the figure of inertia” (the human agent) and therefore the “morphological stability of reality” itself.[10] However, there is a world of difference between the general use of new technologies in the everyday and the specific use of artist-created new technologies. We believe that it is perhaps within the realm of the arts that different relationships can be forged, more intimate couplings created, and more significant effects registered.

In this paper we discuss one aspect of our work with the Bodycoder System: the real-time, one-to-one mapping of sound to gesture, and the sensory and perceptual consequences associated with this method of interaction. First, however, a description of the system is appropriate.

The Bodycoder System

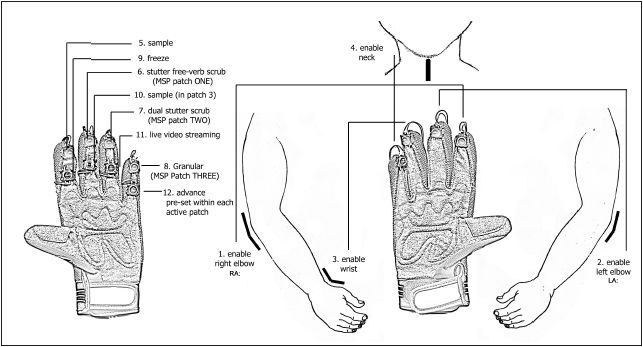

The Bodycoder System, the first generation of which was developed in 1995, comprises a sensor array designed to be worn on the body of a performer. The array combines up to sixteen channels of switches and movement detection sensors (bend sensors) to give the performer decisive and precise control of a multidimensional interactive Max Signal Processing (MSP) environment running on an Apple Macintosh platform (fig. 1). A radio system conveys the data generated by the array to the hardware and computer systems. The Bodycoder is a flexible system that can be reconfigured at both the hardware/sensor and software levels according to our creative and aesthetic needs. Generally speaking, the sensors placed on the large levers of the body are used for more skilled and acute sound manipulations, while less flexible levers perform less subtle manipulations. Switches can be assigned a variety of functions from software patch to patch or from preset to preset. Similarly, the expressivity/sensitivity and range of each sensor can be changed, predetermined from patch to patch. Switches give the performer the means to navigate through the various MSP patches and sub-patches, allowing the performer to initiate live sampling, access sound synthesis parameters, and control and move between MSP patches from inside the performance.

Figure 1
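The per-preset behaviour of the bend sensors and switches described above (each sensor’s range and sensitivity fixed in advance for a given patch, switches stepping between patches) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the Bodycoder’s actual MSP implementation: the 0 to 127 raw sensor range, the use of a power curve to model “sensitivity”, the pitch-ratio presets and all names (`SensorScaling`, `scale_bend`, `PresetNavigator`, `PRESETS`) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorScaling:
    """Hypothetical per-preset settings for one bend sensor."""
    out_min: float      # parameter value at minimum bend
    out_max: float      # parameter value at maximum bend
    curve: float = 1.0  # 1.0 = linear; values > 1 give finer control near the resting position


def scale_bend(raw: int, scaling: SensorScaling, raw_max: int = 127) -> float:
    """Map a raw reading (assumed to lie in 0..raw_max) into the preset's parameter range."""
    raw = min(max(raw, 0), raw_max)          # clamp out-of-range readings
    norm = (raw / raw_max) ** scaling.curve  # normalise to 0..1, then apply the response curve
    return scaling.out_min + norm * (scaling.out_max - scaling.out_min)


class PresetNavigator:
    """Hypothetical switch handler: each press steps to the next preset, wrapping round."""

    def __init__(self, presets: dict):
        self.names = list(presets)
        self.index = 0

    @property
    def current(self) -> str:
        return self.names[self.index]

    def step(self) -> str:
        self.index = (self.index + 1) % len(self.names)
        return self.current


# Two illustrative presets for the same elbow sensor, e.g. driving a pitch-shift ratio.
PRESETS = {
    "wide": SensorScaling(out_min=0.25, out_max=4.0),
    "narrow": SensorScaling(out_min=0.9, out_max=1.1, curve=2.0),
}

nav = PresetNavigator(PRESETS)
print(nav.current, scale_bend(64, PRESETS[nav.current]))
nav.step()  # a switch press moves to the next preset
print(nav.current, scale_bend(64, PRESETS[nav.current]))
```

The same half-bend gesture produces very different parameter values in the two presets, which is one way of picturing how the expressive character of a limb can be redefined from patch to patch while the physical interface stays the same.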

In one of our pieces, The Suicided Voice (2005-2007), the Bodycoder is used to remotely sample, process and manipulate the live vocals of the performer using a variety of processes within MSP. In this piece the acoustic voice of the performer is “suicided”, that is, given up to digital processing and physical re-embodiment in real time. Dialogues are created between acoustic and digital voices, and gender-specific registers are subverted and fractured. Extended vocal techniques make available unusual acoustic resonances that generate rich processing textures, which are then immediately controlled on the limbs of the body (fig. 2).

Figure 2

The Bodycoder System differs from data acquisition systems that analyse movement data without allowing the performer, or human agent, access to that data. For us, the performer’s ability to selectively input and control the data acquired from his or her body, to navigate hardware and software elements, to manipulate and influence the progress of a piece, and to work expressively “within” the system is essential if the performer is not to be reduced to the role of “doer”: a mere human agent reduced to machine code. To achieve this, the Bodycoder interface includes elements that provide the performer with both expressive and utilitarian functions. Expressive functionality is chiefly associated with the proportional bend sensors located on any of the bend and flex areas of the body, and our preferred type of expression is usually kinaesonic.

Kinaesonics

The term “kinaesonic”[11] is a compound of two words: “kinaesthetic”, meaning “the movement principles of the body”, and “sonic”, meaning “sound”. Kinaesonic therefore refers to the one-to-one mapping of sonic effects to bodily movements. In our practice this is primarily executed in real time.

Simple kinaesonic operations, such as playing a small range of notes across the large axis of the arm, are relatively easy. Once the correspondence between arm position and note/sound has been established, such actions can be executed with minimal physical and aural skill. However, when something like an eight-octave range is mapped to the smaller axis of a wrist, the interval between notes/sounds is so small and the physical movement so acute that a high degree of both physical control and aural perception is required to locate specific tones or sonic textures. The absence of consistent visual cues and the lack of tactile feedback further complicate this action.
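To give a sense of the scale involved, the arithmetic below compares the angular travel available per semitone in the two cases. The joint ranges (140 degrees of usable elbow flexion, 70 degrees of usable wrist flexion), the choice of one octave as the “small range of notes” on the arm, and the assumption of a linear mapping are illustrative assumptions, not measurements taken from the Bodycoder.

```python
def degrees_per_semitone(octaves: float, joint_range_deg: float) -> float:
    """Angular travel per semitone when a pitch range is spread linearly across a joint's range."""
    semitones = octaves * 12
    return joint_range_deg / semitones


# Assumed usable ranges of motion, chosen for illustration only.
ELBOW_RANGE_DEG = 140.0
WRIST_RANGE_DEG = 70.0

elbow = degrees_per_semitone(octaves=1, joint_range_deg=ELBOW_RANGE_DEG)  # one octave on the arm
wrist = degrees_per_semitone(octaves=8, joint_range_deg=WRIST_RANGE_DEG)  # eight octaves on the wrist

print(f"elbow, 1 octave:  {elbow:.2f} degrees per semitone")   # 11.67
print(f"wrist, 8 octaves: {wrist:.2f} degrees per semitone")   # 0.73
print(f"the wrist mapping is {elbow / wrist:.0f} times finer")  # 16
```

Under these assumptions each semitone on the wrist corresponds to less than a degree of movement, sixteen times less travel than on the arm, which is why locating a specific tone there depends so heavily on aural perception.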

In the case of composed pieces with pre-configured and consistent sonic responses, kinaesonic skills can be honed through rehearsal. However, with compositions that are wholly or partially based on the live sampling of sonic material, the slightest change in the quality of live input means that there are always slight differences in the sound and in the physical location of the sound mapped to the body. This is made even more acute when working in combination with live processing.

Unlike the interface of a traditional musical instrument, the Bodycoder has few fixed protocols and expressive constraints. For example, in Hand-to-mouth (2007) the interface, including both switches and bend sensors, is located entirely on the hands of the performer (fig. 3). Indeed, within a single composition such as The Suicided Voice, qualities of kinaesonic expression can change from moment to moment, as can the physical location of processing parameters such as pitch, which in the first movement of the piece is located at the left elbow and in the second at the left wrist. So, within a single work, the architecture of the system changes. This means that the performer must adopt an equally flexible approach to working within the system. Shifting expressive qualities and fluid system protocols have a major impact on the performer’s focus and perceptions from moment to moment. An ability to multitask across a range of expressive and utilitarian parameters, while working sensitively and precisely through a range of perceptual and performative states, is therefore a particular skill required of the performer.

Figure 3
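One way to picture an architecture that changes within a single work is as a routing table that is swapped between movements. The sketch below is hypothetical: only the pitch entries follow the text (left elbow in movement one, left wrist in movement two); the other assignments, the sensor and parameter names, and the functions `route` and `locate` are invented for illustration and do not describe the actual MSP patches.

```python
from typing import Dict, Optional, Tuple

# Hypothetical routing tables for The Suicided Voice. Only the pitch entries reflect
# the text; the other assignments are invented for illustration.
ROUTING: Dict[str, Dict[str, str]] = {
    "movement_1": {"left_elbow": "pitch", "right_elbow": "sample_position"},
    "movement_2": {"left_wrist": "pitch", "right_elbow": "grain_size"},
}


def route(movement: str, sensor: str, value: float) -> Optional[Tuple[str, float]]:
    """Return (parameter, value) for a sensor event, or None if the sensor is unassigned in this movement."""
    parameter = ROUTING[movement].get(sensor)
    return None if parameter is None else (parameter, value)


def locate(movement: str, parameter: str) -> Optional[str]:
    """Find the body site that controls a given parameter in a given movement."""
    for site, assigned in ROUTING[movement].items():
        if assigned == parameter:
            return site
    return None


for movement in ROUTING:
    print(movement, "pitch is on the", locate(movement, "pitch"))
print(route("movement_2", "left_elbow", 0.5))  # None: the elbow no longer drives pitch
```

From the performer’s side, the last line is the difficulty the paragraph describes: a gesture that controlled pitch a few minutes earlier now does nothing, or something else entirely.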

Since the Bodycoder’s inception in 1995, the system has developed in parallel with the performer’s skills. In 2004 both the system and the performer reached what might be described as a plateau of technical capability and performer facility. It was at this point that we began to notice the emergence of a range of peculiar sensations and perceptions associated with moments of acute kinaesonic expression.

In 2005, while we were in the final stages of developing The Suicided Voice, we noticed that at certain moments of acute physical and aural focus requiring difficult, fine control, a sensation of feeling sound at the site of physical manipulation was registered. Specifically, this occurred within a sequence of fast live sampling and real-time control on the right elbow lever. The sensation, very much like a resistance, might be described as similar to stroking one’s arm across an uneven sandpaper texture, the texture being perceived as both inside and on the surface of the epidermis. The sensation offered a grainy resistance that correlated with the textural features of the sound being manipulated. Could this peculiar sensation be described as a hallucination? According to The Diagnostic and Statistical Manual of Mental Disorders, compiled by the American Psychiatric Association, a hallucination is “a sensory perception that has the compelling sense of reality of a true perception but that occurs without external stimulation of the relevant sensory organs.”[12] In the case of the Bodycoder System, the sensation of “feeling sound” involves both tactile (motion-oriented) and auditory stimulation, so the resulting sensation cannot easily be described as hallucinatory. The perception of the audible becoming tangible and tactile is more correctly described as “cross-modal” and synaesthetic in nature.

Cross-modal perception

No sense organ or sense perception functions in isolation. To see is not simply an optical operation; otherwise sight would be nothing more than the registering of light without depth or definition. To see involves “our own body’s potential to move between the objects (seen) or to touch them in succession... we are using our eyes as proprioceptors and feelers.”[13] Equally, to hear is not simply a matter of aural reception; it involves a similar form of proprioception, including a projective sense of movement toward a sound source, which allows us to intuit distance and direction. A sense of touch (vibration transmitted to the auditory nerve) is also required to discern velocity, pitch and texture. Most of the time we are unaware of the relational calculations the brain is undertaking; such calculations are, in general, prereflective. Unlike most acoustic instruments, the Bodycoder System provides the performer with no visual or tactile feedback. The sensors are not themselves felt as tactile objects on the body, and while the body does feel itself moving, the sensation of kinesis is much less defined than the sensation of touch. If we hypothesise that the sensation of feeling sound experienced at moments of interactive operation with the Bodycoder System is a sense reflex associated with the normal, yet prereflective, cross-modal functioning of the brain, why is it registered as a concrete perception at moments of acute kinaesonic interaction? Our initial thought was that this might have something to do with working in real time.

At the beginning of the making and development process of a new piece, it is common for the performer to feel frustrated and overwhelmed by the system, particularly by its various real-time response speeds. Although real-time response speeds do vary with the computer’s processing speed for any given processing parameter, and response speeds can of course be “tweaked” in the programming, we tend to favour the most immediate response. Generally speaking, from inside the Bodycoder System the computer’s response speeds are always acutely felt from moment to moment within a piece, since the tempo of the real-time dialogic interaction between computer and performer is very much metered by response times. Only long periods of rehearsal make it easier to adjust to the varying tempi of response. Much has been written about the “action feedback loop” of human-computer interaction. It is well known that computers can respond at speeds that far exceed human cognition. In certain moments of a performance it is very disconcerting to feel the computer’s response times exceed the speed of one’s own cognition. At such moments one feels a real frisson of crisis. It is like stepping off a pavement and nearly being run over by a car that was moving too quickly for its approach to register. It is a near-miss experience that triggers very strong sensations and physical reactions, including sweating, flushing and an increased heart rate. It is the sense of being right on the edge of one’s ability to control and maintain one’s position within the dialogue. So is the peculiar sensation of “feeling sound” a psychophysical reaction to acute response speeds? It could certainly be a contributing factor, along with the geographic dissociation of sound from its perceived point of manipulation.
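The paragraph notes that response speeds can be “tweaked” in the programming. The article does not say how this is done in the Bodycoder’s MSP patches; a common technique for control data in general is a one-pole low-pass smoother, whose time constant sets how quickly a parameter follows the sensor. The sketch below assumes that technique; the names `smoothing_coefficient` and `smooth`, the 100 Hz control rate and the 50 ms time constant are illustrative choices, not values from the system.

```python
import math
from typing import Iterable, List


def smoothing_coefficient(time_constant_s: float, control_rate_hz: float) -> float:
    """Per-step coefficient of a one-pole low-pass smoother; 1.0 means no smoothing."""
    if time_constant_s <= 0:
        return 1.0  # immediate response: output follows input exactly
    return 1.0 - math.exp(-1.0 / (time_constant_s * control_rate_hz))


def smooth(values: Iterable[float], time_constant_s: float, control_rate_hz: float = 100.0) -> List[float]:
    """Apply one-pole smoothing to a stream of control values."""
    a = smoothing_coefficient(time_constant_s, control_rate_hz)
    out: List[float] = []
    y = None
    for x in values:
        y = x if y is None else y + a * (x - y)  # move a fraction `a` of the way toward the new input
        out.append(y)
    return out


step = [0.0] + [1.0] * 10  # a joint snapped from rest to full bend
print(smooth(step, 0.0)[:3])            # [0.0, 1.0, 1.0]: immediate
print(round(smooth(step, 0.05)[5], 3))  # 0.632: about 63% of the way after one time constant (5 steps)
```

A time constant of zero gives the “most immediate response” the authors favour; any positive value makes the parameter lag behind the gesture, trading immediacy for smoothness.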

As in most electro-acoustic systems, sound is diffused across a speaker array that can be situated metres away from the performer’s body. So the point of manipulation, of kinaesonic interaction, is not the point of sound diffusion. From the audience’s point of view this does not seem to degrade understanding or enjoyment of the performance, since most people accept as an aesthetic norm the use and function of speaker arrays and their connection to the operations of a performer or instrumentalist. For musicians who play acoustic instruments, sound is always produced at the point of its manufacture: it emanates from a visible, tactile instrument. One of the reasons we define the Bodycoder as a “system” and not as an “instrument” in the classical sense is that it is not an object (in the sense of a piano or a violin): it cannot easily be seen in its entirety, it is not tactile (it has no keyboard, fretboard, etc.), and it does not produce sound from the physical site of human/instrument interaction. While we tend to use close monitoring (foldback speakers that provide the performer with audio feedback), these are still located a short distance away from the performer’s body. In more physical, full-body pieces such as Lifting Bodies (2000)[14] and Spiral Fiction (2003),[15] which are essentially dance pieces, monitors may be located beyond the performance space. The dislocation of sound from its perceived point of kinaesonic execution and manipulation adds another degree of difficulty for the performer. Even though the intellect can rationalise the concept of dislocation, the sensual body still has to find ways of working with it.
The fact that at points of acute kinaesonic interaction (at moments of high difficulty) the body seems to acquire a sensation of tactility suggests that the brain is trying to “compensate” for, or “facilitate”, interaction by providing its own kind of feedback, in effect closing the gap between the site of manipulation and the dislocated sound source. It creates a sensation of “proximity” by recreating the proximal sensation of a texture, a tactility in the arm, based on what the mind/body knows about movement and touch from other familiar patterns of proprioception. This suggests that the prereflective holds a reservoir of sensate resources: small algorithms for experience, proprioceptive patterns that can be reconfigured and used to help orient the body. This might seem an outlandish claim, but we have all experienced similar sensations in milder forms. Witness the sensation of resistance when playing Wii Tennis or similar so-called “immersive” games, when clearly neither the software nor the hardware is configured for that kind of feedback. Many of us have felt nausea brought on by the sensation of speed and velocity generated by certain VR rides, whose physical movement does not correlate with the perception of extreme movement we think we are experiencing. Is this merely a case of the brain being fooled, or is something else at work here?

Within neuroscience it has long been established that if conflicting information is present across different sensory modalities, our perception of events may be degraded or altered in ways that reflect a synthesis of the different sensory cues. One example is the McGurk effect, described here by Sophie Donnadieu in Analysis, Synthesis and the Perception of Musical Sound:

If the auditory syllable is a /ba/ and if the subjects see a video tape of a speaker producing a /ga/, they report having heard a /da/. This /da/ syllable is an intermediate syllable, the place of articulation of which is between those of /ba/ and /ga/. This clearly shows that visual and auditory information can be integrated by subjects, the response being a compromise between normal responses to two opposing stimuli.[16]

What is interesting here is the notion that a conflict between auditory and visual stimuli can result in a “third”, compensatory perception. Although this does not explain the phenomenon of tactility experienced with the Bodycoder System, it does provide evidence that such perceptual phenomena occur as a result of different sensory combinations. Most recently, the work of Lenggenhager, Tadi, Metzinger and Blanke[17] on bodily self-consciousness has contributed to discourse concerning the sensory effects of new technology, in this case virtual reality representations, on the experience of a human agent. In their 2007 paper, Lenggenhager, Tadi, Metzinger and Blanke describe an experiment that used conflicting visual-somatosensory input in virtual reality to disrupt the spatial unity between the self and the body. They found that “during multisensory conflict, participants felt as if a virtual body seen in front of them was their own body and mislocalized themselves toward the virtual body, to a position outside their bodily borders.”[18] The experiment, which used both visual and tactile stimulation of the subject, showed that “humans systematically experience a virtual body as if it were their own when visually presented in their anterior extra-personal space and stroked synchronously.”[19] This “misattribution” of feeling, often referred to as “proprioceptive drift”, has also been recreated by more “analogue” means by Ehrsson, Spence and Passingham to test feelings of “ownership” for a rubber hand:

We used functional Magnetic Resonance Imaging (fMRI) to investigate the brain mechanisms of the feeling of ownership of seen body parts. We manipulated ownership by making use of a perceptual illusion: the rubber hand illusion. During the experiment, the subject’s real hand is hidden out of view (under a table) while a realistic life-sized rubber hand is placed in front of the subject. The experimenter uses two small paintbrushes to stroke the rubber hand and the subject’s hidden hand synchronizing the timing of the brushing as closely as possible. After a short period, the majority of subjects have the experience that the rubber hand is their own hand and that the rubber hand senses the touch.[20]

What is interesting about all of these examples is the necessity of “synchronous”, one-to-one, real-time sensory stimulation to instigate the sensations of “mislocation”, auditory “compensation” and “ownership”. On the basis of this evidence we can perhaps hypothesise that the real-time interaction of the Bodycoder System, coupled with kinetic and auditory sense stimulation and problematised by sound dislocation and action-response speeds, stimulates the sensation of “feeling sound”. Moreover, like the McGurk effect, the tactile sensation of sound at the point of kinaesonic operation could perhaps be said to be a “compensatory” or “third” perception, akin to an intermediary /da/.

Conclusion

Merleau-Ponty states, “if we were to make completely explicit the architectonics of the human body, its ontological framework, and how it sees itself and hears itself, we would see that the structure of its mute world is such that all the possibilities of language are already given in it.”[21] Some of these languages may yet be unfamiliar, their awakening reliant on particular kinds of stimulation from the external world. We know that our environment is changing: it is being populated by new and interesting technologies, technologies that have the power to turn us inside out, make our inner worlds visible and audible, and allow our imaginations to fly from the body. Equally, the body seems to have the power to reach out and project a sensation of itself onto objects such as a rubber hand or an avatar inside a VR environment. Merleau-Ponty hypothesised that such sensual perceptions and potentials, many of which are described in this paper, are already “innate” within the hitherto “mute” realm of the prereflective. Equally, it could be proposed that they are the effects of the new relationships the body is forging with a world saturated with new technology. Seeking to further articulate and refine such unique sensual registers through art practice may be self-altering. Whether innate or emergent, such sensual registers represent a potentially new language, the “organicity” of which is yet to be fully explored.

We believe that our work with the Bodycoder System demonstrates that the mind and body can reconfigure their sense ratios when prompted by technology and, crucially, that they can find the resources within themselves to participate fully in a sensual dialogue with new technology. We believe that the kind of synaesthetic perception that is becoming an integral part of our work with the Bodycoder System does not alienate the body, as Paul Virilio has argued. It does affect intellectual constructs and human agency, and it does disrupt the “stability of reality”,[22] if by “real” we mean only normal sense-to-sensation experience and not those peculiar “cross-modal” sensations occasioned by conflicting information presented across different sensory modalities.

In his seminal book Parables for the Virtual, Brian Massumi states, “synesthetic forms are dynamic. They are not mirrored in thought; they are literal perceptions.”[23] A great deal of research now emerging from neurology upholds this hypothesis, but we have yet to understand its purpose. In terms of our work with the Bodycoder System, there is no doubt that the sensation of feeling sound in an area of the body where acute kinaesonic expression takes place provides the performer with a sensate register that enhances control and interaction. It intervenes at moments when control becomes difficult and could therefore be said to assist interactive operations.

We would suggest that our work with the Bodycoder System contributes to evidence that the mind/body is making available new registers that have the potential to facilitate closer and more skilled articulations between humans and computer/digital environments. Such registers may, at some point in the future, be found to constitute a new sensate “organicity” that shapes and informs more sophisticated human-technology articulations and interactions. That we can even consider looking beyond conventional aesthetic forms to the possible existence of new creative dynamics lying outside the purely intellectual realm is due in large part to the work of John Cage and of artists such as Jackson Pollock, William Burroughs and other pioneers of instinct and chance. In 1967 Marshall McLuhan proposed that the next medium “may be the medium of consciousness”[24] itself. Far from being non-intentional, the new “organicity” of the medium may arise out of the intentionality of “control” and “real-time” interaction. It may be characterised by cross-modal perceptions similar to the sensation of “feeling sound” described in this paper, and by other sensations emerging from work in neurology. The new organicity of “consciousness as medium”, and the new operations and aesthetics made available through that medium, may arise out of an intimate state of “seizure”: the sense and sensation of the coupling of humans and technologies.

Appendices

Biobibliographical Notes

Julie Wilson-Bokowiec is a writer and performer. She has also directed and created dramatic, musical (opera) and theatrical works. She is currently a visiting researcher at the University of Huddersfield.

Mark Bokowiec is a composer. He directs the acoustic music studios as well as the new Spacialization and Interactive Research Lab at the University of Huddersfield. He also teaches interactive performance, composition, and interface and system design.

Notes

[1] Marshall McLuhan, The Gutenberg Galaxy: The Making of Typographic Man, London, Routledge and Kegan Paul, 1962, p. 42.

[2] John Cage and Joan Retallack, Musicage: Cage Muses on Words, Art, Music, Hanover, Wesleyan University Press and University Press of New England, 1996, p. 139.

[3] Richard Kostelanetz, Conversing with Cage, New York and London, Routledge, 2003, p. 232.

[4] Perhaps the most striking and famous example of this is Cage’s 4′33″ (in three movements) (1952).

[5] The sense and sensation of being seized by each other: of being possessed by, or in possession of, each other.

[6] Gilles Deleuze, Francis Bacon: The Logic of Sensation, trans. Daniel W. Smith, London and New York, Continuum, 2003, p. 67-68.

[7] Including software and Internet sites designed for mass use and consumption.

[8] Paul Virilio, Open Sky, trans. Julie Rose, London and New York, Verso, 1997, p. 74.

[9] Linda Stone is a former Vice President of Microsoft.

[10] Paul Virilio, Polar Inertia, trans. Patrick Camiller, London, Thousand Oaks and New Delhi, SAGE Publications, 2000, p. 30.

[11] For a full description of this, see Julie Wilson-Bokowiec and Mark Bokowiec, “Kinaesonics: The Intertwining Relationship of Body and Sound,” Contemporary Music Review, Special Issue: Bodily Instruments and Instrumental Bodies, London, Routledge Taylor and Francis Group, vol. 25, n° 1-2, 2006, p. 47-58.

[12] The Diagnostic and Statistical Manual of Mental Disorders, American Psychiatric Association, 4th Edition, 1994, p. 767.

[13] Brian Massumi, “Sensing the Virtual, Building the Insensible,” in Stephen Perrella (ed.), Hypersurface Architecture, special issue of Architectural Design (Profile n° 133), vol. 68, n° 5-6, May-June 1998, p. 16-24.

[14] Julie Wilson-Bokowiec and Mark Bokowiec, “Lifting Bodies: Interactive Dance—Finding new Methodologies in the Motifs Prompted by new Technology—a Critique and Progress Report with Particular Reference to the Bodycoder System,” Organised Sound, Cambridge, Cambridge University Press, vol. 5, n° 1, 2000, p. 9-16.

[15] Julie Wilson-Bokowiec and Mark Bokowiec, “Spiral Fiction,” Organised Sound, Special Issue: Interactive Performance, in association with the International Computer Music Association, Cambridge, Cambridge University Press, vol. 8, n° 3, 2003, p. 279-287.

[16] Sophie Donnadieu, “Mental Representation of the Timbre of Complex Sounds,” in James W. Beauchamp (ed.), Analysis, Synthesis, and Perception of Musical Sounds: The Sound of Music, New York, Springer, 2007, p. 305.

[17] Bigna Lenggenhager, Tej Tadi, Thomas Metzinger and Olaf Blanke, “Video Ergo Sum: Manipulating Bodily Self-Consciousness,” Science, vol. 317, 24th August 2007.

[18] Bigna Lenggenhager, Tej Tadi, Thomas Metzinger and Olaf Blanke, “Video Ergo Sum: Manipulating Bodily Self-Consciousness,” p. 1096.

[19] Bigna Lenggenhager, Tej Tadi, Thomas Metzinger and Olaf Blanke, “Video Ergo Sum: Manipulating Bodily Self-Consciousness,” p. 1098.

[20] Henrik Ehrsson, Charles Spence and Richard E. Passingham, “That’s My Hand! Activity in Premotor Cortex Reflects Feeling of Ownership of a Limb,” Science, vol. 305, 6th August 2004, p. 875.

[21] Maurice Merleau-Ponty, The Visible and the Invisible, trans. Alphonso F. Lingis, Evanston, Northwestern University Press, 1968, p. 155.

[22] Paul Virilio, Polar Inertia, p. 30.

[23] Brian Massumi, Parables for the Virtual: Movement, Affect, Sensation, Durham and London, Duke University Press, 2002, p. 186.

[24] Eric McLuhan and Frank Zingrone (eds.), The Essential McLuhan, London, Routledge, 1995, p. 296.
