
Abstract

The author of this article outlines the development of a scoring rubric to grade scientific translations. The article begins by enumerating the assumptions that shaped his teaching initially, before demonstrating how classroom observations eventually led the author to understand that his assumptions were faulty. The experience leads to a deeper understanding of student competencies, which are used to create an instrument that helps to describe student achievement and assign it an actual grade. The author argues that the rubric plays a part in overall student learning, and he describes the development of the rubric within the context of demographic changes taking place in North American universities.

Keywords/Mots-Clés:

- translation pedagogy

- scientific translation

- evaluation and measurement

Résumé

Dans cet article, l’auteur décrit l’élaboration d’une grille d’évaluation pouvant servir à attribuer une note à des traductions scientifiques. Pour commencer, il énumère les présuppositions ayant orienté sa première expérience d’enseignement dans ce domaine. Ensuite, il présente des observations faites en salle de classe qui l’ont mené à remettre ses présuppositions en doute et il construit une grille d’évaluation. Cette dernière sert à décrire les niveaux de rendement des étudiants et à calculer les notes qui leur seront attribuées. La grille s’emploie également comme partie intégrale de l’apprentissage global des étudiants. Pour terminer, il trace le lien entre son cheminement comme professeur et les changements démographiques touchant les campus universitaires en Amérique du Nord.


1. Introduction

How do new teachers learn? How do they develop expertise? In the case of teachers of translation, graduate studies and professional experience doubtless equip them with the content knowledge needed to teach, but the classroom still presents neophytes with ample challenges. The most nerve-wracking of these is arguably grading student work. It is easy for teachers to fall into the trap of using their professional sense of the quality demanded in the translation marketplace to intuitively assign a grade. A teacher’s intuition may be right, and the grade may give an accurate indication of the distance students have yet to travel before they are able to produce market-ready translations. However, if the grading process is not transparent – that is, if the space between the teacher’s first reading of the student work and the eventual arrival at a grade is a black box whose inner workings the students cannot see or understand – then students are likely to become frustrated and to miss out on an opportunity to learn.

Yet because of demographics, the intuitive grade could become commonplace. Major population shifts are affecting university campuses across North America. In short, class sizes are growing, and the number of experienced professors is shrinking. Enrolment is on the rise, both because of widespread demographic phenomena, like the coming of age of the echo generation (Spencer 2001; Foot 1998, 2001), and because of more localized phenomena, like the on-campus arrival of Ontario’s double cohort[1]. At the same time, the average age of university faculty continues to rise. The majority of North American university professors are now approaching retirement (Spencer 2001), and it is estimated that thousands of new faculty will have to be hired to replace their older colleagues and to accommodate the echo generation (Foot 2003).

What this information suggests is that there are, and will continue to be, growing numbers of new teachers in translation programs across North America. It is therefore important to develop a clear understanding of the ways in which new teachers can complement their subject-matter knowledge with pedagogical know-how. This article investigates the development of such know-how, by mapping out an alternative to the intuitive grade. It shows how new teachers can use their own first experiences in the classroom to develop a grading tool that is transparent and that can be incorporated into classroom learning in a holistic way.

To do this, the article outlines my own learning curve as the teacher of a course on scientific translation. It begins by explaining the assumptions that initially guided my teaching, before describing the surprises that led me to question these assumptions and construct a more tailored scoring rubric to grade student work. Finally, it draws some conclusions about the use of scoring rubrics to promote student learning in a more general way. The development of a tool for grading is presented in a narrative fashion, because the objective here is to open up the black box and become more aware of the sometimes unconscious thoughts that guide intuitive grading.

2. Initial Assumptions

The course I taught on scientific translation was designed around the competencies that students would need to perform well. Competencies are the underlying characteristics that indicate a given person’s way of behaving, thinking, or generalizing across situations (Spencer and Spencer 1993). They are most often studied in educational and professional settings as a basis for measuring achievement. In this case, the goal was to determine what knowledge and skills[2] the students would need to translate scientific texts. I realized that I held two assumptions about student ability: the first concerned the competencies that I believed students would already have in place, while the second concerned the competencies I felt students would need to develop.

The specialized translation course was offered to students in their third year of university study, which was also their second year in a translation program. As a result, my assumption was that students would already be able to demonstrate a certain level of achievement in the competencies required to translate general texts. Specifically, I expected that students would be able to

- Write grammatical sentences;

- Structure the flow of information within paragraphs; and

- Shape their writing into suitable text types for a given setting and a given audience.

It was also my belief that students could do this while resisting the influence of source-language structures. In other words, I thought that the students would be able to write idiomatically and clearly at the sentence, paragraph, and text levels. After all, they had had to demonstrate these abilities in the general translation courses they had taken previously. There would, of course, be a range of achievement levels in each of the three competencies across a group of students, but it did not seem reasonable to assume that I would have to focus much attention on these areas in my teaching.

On the other hand, it did seem reasonable to expect that students would have difficulty with features that are characteristic of scientific writing. Specifically, I anticipated that students would need to develop the competencies to

- Find and use scientific terminology; and

- Understand and write about scientific concepts.

Therefore, the plan was to help the students examine terminology in specialized lexicons and databases, and in parallel English-language texts. Similarly, my intention was to teach students to tease out building-block concepts when they were first approaching a new content area, and to use these concepts to increase their understanding. I reasoned that since the students had not encountered specialized terminology and concepts in previous translation courses, they would need to concentrate their attention on them. Consequently, developing the competencies to deal with terminology and concepts would also have to be the focus of my teaching.

3. Surprises along the Way

Given these initial assumptions, my classroom experience was surprising in a number of ways. In the pages that follow, these surprises are described, and this description is broken down into five categories: the use of terminology, the manipulation of concepts, sentence-level writing, paragraph-level writing, and text-level writing.

3.1 The Use of Terminology

Before the course began, I imagined my students’ lexical needs in terms of the distinction between language for general purposes (the language of day-to-day communication), and language for specific purposes (the language of experts communicating within their area of expertise) (Bergenholtz and Tarp 1995: 17). I reasoned that the students would have developed a level of ability to recognize and use general lexical items in the source and target languages. Before taking the course, the students had had a year of translation study during which they had used general dictionaries, both unilingual and bilingual. In addition, they had read a large number of source-language texts on general topics, and they had consulted parallel target-language texts on general topics. Conversely, the expectation was that students would need to learn to use specialized lexical items. This was because the students had not studied terminology, nor used specialized dictionaries, nor yet translated specialized texts.

What I discovered was surprising. The vast majority of the terms that students encountered in the original French texts appeared to be direct cognates of English terms. In many cases, students were able to locate a previously unseen lexical item in the source text and simply guess at the corresponding English form. Figure 1 contains examples of this kind of term. The examples are taken from two units covered in the course: the first was on drug trials for medications used in Highly Active Anti-Retroviral Therapy (HAART), and the second was on the neuropsychology of bilingualism.

Figure 1

Examples of Cognate Terms

What these examples suggest is that students actually had to do very little work to find accurate target-language terminology. It is true that in some instances, there was no obvious correspondence between source- and target-language terms. Some of the specific terms of this type that students encountered in the course are listed below in Figure 2.

Figure 2

Examples of Non-Cognate Terms

However, in these cases, students were more often than not able to use one of three strategies to locate a needed equivalent within a short period of time. First, they could use their knowledge of the Greek and Latin roots so common in scientific terminology to suggest a target-language term (e.g., “hémato-méningée” to “blood-brain”). Second, they could consult an online terminological database. All the students made use of the Internet to do their translations, and they had access to term banks like the Grand dictionnaire terminologique through the Office de la langue française. Third, the students could make use of a parallel text. It was easy for them to find English-language journal articles that were equivalent to the French ones they were translating – as university students, they had access to electronic periodical indices and a host of online scholarly journals.

Together, what these two sets of examples might indicate is that the students generally had little difficulty with lexical items in their translation work. Students appeared to be able to find accurate terminology for their translations either through quick online searches or through guesswork. However, this conclusion is not entirely accurate. On several occasions, the students were asked to analyze a source text in class, and as a first step in this analysis, they were encouraged to identify source-language terms that would require research. In other words, they would be given a French text they had not seen before, and they would be asked to underline or highlight “technical terms” that they would need to look up when they had access to paper or online resources. A short list of some of the lexical items they selected is contained in Figure 3.

Figure 3

Examples of Student-Identified “Terms”

What is clear here is that the students had in fact not picked out technical terms, but rather that they had identified lexical items that are part of language for general purposes. In other words, what began as a search for terminology had in fact revealed gaps in the students’ knowledge of everyday language. Granted, that everyday language is the type needed to read at a university level in French, but it is in no way related specifically to the expression of scientific content.

3.2 The Manipulation of Concepts

In the original planning for the course, I had intended to spend a significant amount of time teaching students how to approach a new field of study by identifying and learning certain base concepts. This approach was based on both theory and practice. A number of researchers who have examined the translation or interpreting process underline the importance of building a cognitive framework to facilitate learning: Lederer (1981) argues that knowledge of the immediate “situation” (“contexte cognitif”) and prior world knowledge (“bagage cognitif”) are essential for understanding, and her claims are somewhat reflected in Dancette’s (1997) discussion of the textual level and the notional level in translation. What this suggests is that translation ability is in large part dependent on an understanding of realities beyond the text. Thinking back to my own work with scientific translation, I could see that this was true. I recalled several assignments that required me to do external research in order to understand the seminal ideas in the text I had to translate.

Believing the establishment of base concepts to be an important part of scientific translation, I began each unit of the course with an assignment that required students to do some light background reading and to answer a short series of questions. In the following class, students had the opportunity to review the answers to the questions and to understand their importance in the context of their translation work. For example, at the beginning of a unit on HAART, students read about the replication cycle of HIV in the human body, and about the ways in which the three main classes of drugs – Protease Inhibitors (PIs), Nucleoside Reverse Transcriptase Inhibitors (NRTIs), and Non-Nucleoside Reverse Transcriptase Inhibitors (NNRTIs) – disrupt the replication cycle. They also learned about the two main tests (viral load and CD4 count) that are performed on seropositive patients.

I had expected to use introductory lessons like these to build up to the more complex concepts that we found in the texts. However, the students soon demonstrated that it wasn’t necessary to spend a great deal of time looking at scientific concepts, because they started making connections on their own. For example, the students very quickly understood that most of the reports on clinical trials that they were translating were trying to compare the effectiveness of different combinations of the classes of drugs[3]. They also understood that “effectiveness” was often evaluated in terms of viral load and CD4 counts, the two measures they had discussed at the beginning of the unit. In other words, once the students were introduced to a few “building blocks,” they were generally able to do the remaining work on their own to understand scientific concepts in the text.

However, this finding should not suggest that students had no difficulty with the concepts presented in the texts they worked on. In fact, there were numerous instances where students had difficulty understanding what was going on in the text, and for non-linguistic reasons. Two such instances are outlined in Figure 4 below.

Figure 4

Student Translation Showing Difficulty with General Concepts

In the first example, Viard (2003) explains that the article he is reviewing is a small study that was part of the larger Novavir trial. In the translation, however, the student mistakenly conflates the two prepositional phrases (“d’une sous-étude” and “de l’essai Novavir”) and indicates that Novavir is the name of the substudy. In the second example, taken from a French newsletter for people with HIV, the author explains that AZT used to be used as a treatment on its own, but that it is now rarely used as a single medication. Instead, it is more commonly prescribed with other drugs to form a “cocktail.” The student translation wrongly interprets the adverbial construction (“le plus souvent utilisé”) as a nominal one (“le molécule le plus souvent utilisé”), suggesting that AZT is the most frequently used drug. This faulty interpretation is particularly interesting, because discussion in class repeatedly emphasized the history of the treatment of HIV, and the move from monotherapy to cocktail therapy.

What is noteworthy in these two examples is that the students’ difficulties cannot be explained by a lack of specialized information: it does not require scientific knowledge to understand that a large study has a subcomponent, or that there is a difference between “most used” and “most often used.” Similarly, it seems unlikely that student performance can be explained by a lack of linguistic knowledge. All the students in the class knew enough French to understand “souvent” and “sous-étude.” Instead, the examples above could be explained by noting that students appeared to have occasional difficulties with general concepts in the texts they translated.

3.3 Sentence-Level Writing

The previous sections of this article discuss the students’ ability to use terminology and manipulate concepts. The sections that follow provide a closer examination of the capacity to write, beginning at the sentence level.

In my initial thinking about the students’ competencies, I believed that they would have developed the skill to write correct and effective sentences. All the students had taken a number of general translation courses, and it seemed to make sense that these courses would have strengthened their knowledge of grammar, punctuation, and spelling.

As the students produced more work in class, it appeared that my assumption about sentence-level writing was correct. To be sure, there was a range of ability in the classroom. Some students’ work was fraught with spelling mistakes, punctuation errors, and grammatical slip-ups, while the work of others was largely devoid of such problems. In addition, students weren’t always familiar with the more complex aspects of sentence writing. Students knew the rules for using a comma, but not those for using a semi-colon. But overall, students had knowledge of grammar, punctuation and spelling, and it was possible to deal with deficiencies in their knowledge without sacrificing a lot of class time[4].

3.4 Paragraph-Level Writing

The next surprising discovery came out of what might be termed the discursive features of student work – features that are divided here into paragraph-level (Section 3.4) and text-level writing (Section 3.5). Both kinds of writing hinge on students’ ability to organize their texts into structures larger than the sentence.

The first such structure was the paragraph. There are a number of ways to structure writing at the paragraph level, but the discussion in this section will deal with one particular strategy – the use of the topic and stress positions from sentence to sentence to create a cohesive whole. Gopen and Swan (1990) point out that, in English, when effective writers want to draw readers’ attention to new information, they place it in the stress position, which is located at the end of a syntactic unit. Meanwhile, the beginning of the unit – the topic position – is reserved for information that either provides the necessary context for readers to understand what falls in the stress position, or that provides a link to information in previous units.

When writers use the two positions in this way, their strategy has two important effects on structure. First, within the syntactic unit, the reader is guided along smoothly. This is because the beginning of the unit contains ideas that are already stated, implied, or familiar, while the end of the unit expresses ideas that are hard to predict, unfamiliar, or significant (Williams 1981). In other words, as readers move from beginning to end of the unit, they transition smoothly from known to unknown. Second, across syntactic units, there is a high degree of cohesion. What was unfamiliar and placed in the stress position of one unit becomes familiar and is placed in the topic position of the next. As ideas are introduced and expanded upon, the writer creates references backwards and forwards throughout the paragraph as a whole.

Many writers learn to create paragraph structure through the topic and stress positions somewhat intuitively, but this did not appear to be the case with the students in the scientific translation class. To illustrate student difficulty with paragraph structure, an excerpt from a student translation is provided below in Figure 5. The source text (Vincent 1999) is a popularization of science that describes a study conducted by a research team in Japan. The title of the article establishes the topic as the neuropsychology of sign language, and the first paragraph of the text (not included below) introduces the reader to the Japanese team. The second paragraph, which appears below, presents the reader with the instrument used by the team.

Figure 5

Student Translation Showing Difficulty with Paragraph Structure

In the French text, familiar information does appear at the beginning of the first sentence – both the study and the researchers are mentioned from the start. Unfamiliar information is set up very carefully; the writer first explains a new technology in general terms (“l’un des plus puissants outils d’exploration”), before naming it for the readers (“la tomographie par émission de positrons”). The English translation reverses the order of information, presenting an unknown idea first and then linking it to an idea readers have already seen.

As the French paragraph continues, the movement from known to unknown is preserved. Mention of “la technique” refers back to “TEP,” and the syntactic unit closes with new information about a “surconsommation.” In the final clause beginning with “donc,” an ellipsis obscures an easy-to-reconstitute subject and verb (il s’agit de), and readers are left with a new way to understand the effect of the increased consumption (“débit sanguin accru”). Yet the English version once again upsets the expected structure. The last two sentences both begin with unpredictable information (“the fact” and “blood flow”), and they end with references to ideas already presented (“PET technique” and “this consumption”).

In English, the order of information produces a paragraph that is abrupt, difficult to read, and lacking in cohesion. Further, this example was not an isolated one. Instead, it was representative of student work in the course on the whole, a finding that caught me off guard. The need for effective paragraph structure is not unique to scientific writing, and I had expected that the ability to write at the paragraph level would be a competency that students would bring with them into the course. However, evidence from student work suggested this expectation was wrong. Indeed, students seemed to feel the need to change the structure of a piece of writing, oblivious to the fact that in doing so they had violated that structure. It was as if somehow the act of moving the pieces around would create the false but hopeful impression in the reader that the students had mastered the text by handling it.

This section has presented one piece of evidence – the use of the topic and stress positions for given and new information respectively – but this is not the only feature that helps to create structure at the paragraph level. For example, Gopen and Swan (1990) mention the minimization of subject-verb distance, and the inclusion of phrases and transition words as introductory elements. When there is great distance between subjects and verbs in sentences throughout a paragraph, the reader faces an additional cognitive burden and has difficulty understanding how information in the paragraph is inter-related. Similarly, when phrases and transitions are not present to provide context and to link with preceding information, the reader has to work harder to follow the flow of ideas. Examples of these features are not provided here because of space constraints, but they also posed a problem for students in the course.

3.5 Text-Level Writing

Another discursive feature that was surprisingly problematic was the structure of the text itself. There are instances in writing when meaning is derived not from linguistic units at the sentence level, but rather from the text as a whole and from the argument it expresses in its situation of use. In other words, readers’ understanding depends in part on their ability to see the overall argumentative shape of a piece of writing, and to recognize how that shape fits in with other knowledge that they already possess.

To illustrate text-level writing, one of its particular manifestations is discussed here: the interpretation of inference. The use of inference is described by Setton (1999) in his discussion of both the comprehension and production sides of the translational process[5]. Setton’s account suggests that to understand source-language material, translators rely on their ability to compare linguistic content against the pragmatic intent of the source-language producer. At times when intent and content do not match up, when the producer makes an inference, translators must make an interpretation in order to understand. When it is the translators’ turn to produce target-language material, they likewise compare the language forms they are using against their understanding of their addressee. They then decide whether to include, exclude, highlight, or tone down source-language material in the target-language production.

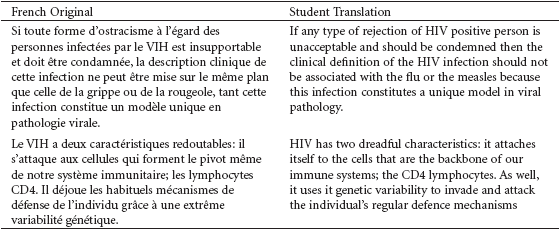

Again, an excerpt from a student translation will illustrate the interpretation of inference more clearly. In Figure 6, the source text is another popularization of science that describes the HIV infection process. The paragraphs presented here are the first and second in the article; the first offers a very general introduction to the topic, while the second begins the actual discussion of infection.

Figure 6

Student Translation Showing Difficulty with Interpretation of Inference

The first paragraph of the French text offers an interesting challenge. The paragraph sets up a contrast, a fact that is tipped off by the very first word. “Si,” in this instance, is being used not to introduce a condition, but rather a concession. In other words, the intention behind the author’s use of the word is not to discuss some hypothetical situation. Instead, it is as though he wishes to say, “I will concede to you that X is true, but I also want to emphasize Y”[6]. In addition, the juxtaposition of X and Y implies a contrast between the two.

If we look more closely at the French text, we can see that this contrast is complex. On the macro level, the author is contrasting diseases with the people who suffer from them. The macro contrast is broken down into four components on the micro level: 1) HIV; 2) other diseases; 3) people with HIV; and 4) people with other diseases. Components 1 and 2 are contrasted, while components 3 and 4 are compared. The structure of this argument is depicted in Figure 7 below.

Figure 7 Representation of Argument Structure in Michel (1995)

The difficulty in this argument structure is that not all of its components are explicitly stated in the text. Indeed, the reference to people with other diseases is implicit. However, readers are able to interpret the inference by making use of their outside knowledge. For example, they may recall some of the language used to talk about people with HIV at the end of the 1980s. At that time, it was not uncommon to describe children infected in the womb and recipients of blood transfusions as the “innocent victims of AIDS”[7], which in turn implied that there must also be “guilty victims.” To make a similar distinction with regard to other diseases (“the innocent victims of influenza,” “the guilty victims of the measles”) would seem silly. This realization is the basis for the author’s argument.

Yet what is interesting in the English translation is that the larger structures go unaddressed. The French “si” is translated in a knee-jerk manner as “if,” the argument structure with its complex contrast is completely indecipherable, and no extra-textual inference has been made. In class, when these three problems were pointed out to the students, they were able to come up with a more satisfying translation for the first paragraph:

It is important to speak out against the stigmatization of people living with HIV, and to treat them just like the sufferers of any other disease. However, HIV itself cannot be put in the same class with illnesses such as influenza and measles, because its pathology is unique among viruses.

What this entire exercise suggests is that, once again, the initial assumptions about student ability were wrong. I had believed that students had had the opportunity to develop their general writing abilities – including their ability to structure writing at the text level – and that I would therefore be free to concentrate on other things in my teaching. However, evidence in the classroom indicated that students needed additional help.

This section has looked at only one feature of text-level writing, but there are others. For example, there are many instances where writers convey complex argument structure to their readers by using metadiscourse – a kind of “second voice” in the text that rises above the discussion of content to comment briefly on the organization of form[8]. Although space constraints prevent the provision of examples of metadiscourse, it must nonetheless be pointed out that it is a feature of text-level writing that posed a problem for students in the course.

4. Building a Rubric

To summarize, observations in the classroom suggested that, overall, translation students

- Have relatively effective strategies for finding and using scientific terminology, but they need to integrate these strategies into a larger ability to deal with lexical items generally;

- Have relatively effective strategies for learning scientific concepts, but they need to integrate these strategies into a larger ability to deal with conceptual information more generally;

- Have reasonably developed skills to write grammatical sentences;

- Have difficulty structuring the flow of information to create effective paragraphs; and

- Are not accustomed to shaping their writing into text structures that function within a given context.

Together, these five statements provided a good understanding of student competencies. They thoroughly described the skills and knowledge that students need to develop in order to translate scientific texts effectively.

Still, up to this point in the course, this information had not suggested a clear means for solving the original problem – arriving at individual student grades. What was needed at this juncture was a way to turn this understanding into a system for evaluating student work. It was necessary to find a tool that could accommodate the competencies that were identified as important, and that could incorporate observations of student translation to categorize different levels of achievement. What was needed was a rubric.

4.1 Choosing the Type of Rubric

In their book on scoring rubrics, Arter and McTighe (2001) identify three basic considerations to bear in mind when designing a rubric: whether the rubric will be holistic or analytical; whether the rubric will be generic or task-specific; and how many levels of achievement the rubric will describe. Their discussion of these considerations is summarized below.

- Holistic versus Analytical

A holistic rubric provides a single rating for an entire student performance, and that rating is based on an overall impression of the student’s work. Holistic rubrics are good tools for rating simple performances, and they provide an overall snapshot of the quality and effect of those performances. They tend to be used in large-scale assessments. However, they do not provide a detailed analysis of a student’s strengths and weaknesses, and they are not useful as diagnostic tools. In contrast, an analytical rubric divides a student performance into essential dimensions that can be judged separately. Analytical rubrics are good tools for evaluating complex performances, and for giving specific feedback. They tend to be used in the classroom. However, it is often time-consuming to learn and apply analytical rubrics.

- Generic versus Task-Specific

A generic rubric is one that is designed to be used to assess a number of similar performances. It is appropriate to use when students must demonstrate the same complex skills across a number of tasks. Generic rubrics are helpful in teaching students about the general nature of quality, and using them means that students do not have to learn a new rubric for each new task they carry out. In contrast, a task-specific rubric is one that is written to be used with one single task. Each time students are given a new task to carry out, a new rubric must be designed to evaluate their performance. Task-specific rubrics are easier and faster to use, and they generally provide more consistent scores. They tend to be used in large-scale or high-stakes assessment, and they are good for evaluating particular facts or methods.

- The Number of Levels of Achievement

Logic would dictate that a rubric needs to describe at least two levels of achievement, satisfactory and unsatisfactory. However, it is often recommended that a rubric have a minimum of four levels, so that it can adequately describe a range of behaviours and indicate to students what they need to do to make progress. It is also recommended that the rubric not have more than eight levels, because beyond this point, it becomes difficult for teachers and students alike to distinguish clearly among them.

It seemed most appropriate to work with an analytical rubric, for several reasons. First, experience in the classroom had shown that translating scientific texts is a complex task: a number of competencies are required to translate scientific texts effectively, and these competencies are separate constructs. For example, there were often cases where one student had a high level of achievement writing at the sentence level, but a low level of achievement writing at the paragraph level, while the achievements of another student were reversed. Second, Arter and McTighe point out that analytical rubrics are appropriate classroom tools and that they are useful for diagnosing individual strengths and weaknesses. Third, it seemed clear that if enough classroom time were devoted to the rubric, students could learn to use it effectively not only to understand the way they were being evaluated, but also to help guide their learning.

Similarly, there were compelling reasons to work with a generic rubric. First, what was important for students to learn was not the specifics of the individual texts they worked on, but rather the general abilities they were developing in the course of the work. In fact, it was unreasonable to expect that students would be called upon in their professional lives to translate texts on the topics examined in class (HAART and the neuropsychology of bilingualism). Instead, the goal in choosing these topics was to foster within the students the skills to approach a new topic area and bring themselves up to speed quickly. Second, with a generic rubric, students needed to become accustomed to only one such instrument.

It was less straightforward to determine the third point – the number of levels of achievement the rubric would describe. In the literature on rubrics, scholars often make use of a taxonomy of learning to categorize achievement (for one example, see Suzuki, 1997). Therefore, a specific taxonomy was selected, and the rest of the rubric was constructed around it.

4.2 Integrating a Taxonomy

The particular instrument chosen was the Structure of Observed Learning Outcomes (SOLO) Taxonomy (Biggs and Collis 1982). SOLO was in part inspired by Piagetian theories of learning. But where such theories focus on students’ stages of development, SOLO examines student responses. More precisely, it describes the quality of a particular student performance, at a particular time. And SOLO suggests that the quality of a performance can best be determined by looking at its structure.

The SOLO taxonomy describes the structure of student performance at five levels, each of which is outlined briefly below.

- Prestructural

At this level, students display little evidence of genuine learning. They may miss the point of a pedagogical exercise, or they may use denial to get around their lack of understanding. Students may also respond with a tautology, i.e., with information from the “cue” (the question or assignment as provided by the instructor).

- Unistructural

Students are only able to use one element of a larger task or activity, and they fail to see and use other important elements. They are on the right track, but they need to deepen their understanding of the task at hand. Their response is formed with information that may come from the cue and only one other pertinent datum.

- Multistructural

At this level, students are able to use several elements of a larger task or activity, but they are not able to connect them in meaningful ways. They see the task as a list of facts with no understanding of significance or context. A typical response uses information from the cue and several pertinent data, but the data are not arranged in ways that show relationships among them (i.e., ideas are expressed in the form of “and…,” “and so…,” “and also…”).

- Relational

Students are able to integrate elements of the task into a system appropriate for the situation at hand. There is evidence that they understand the broader context behind the task at hand. They can generalize within a given or experienced situation, and their responses are constructed with information from the cue, pertinent data, and their understanding of the interrelationships among them.

- Extended Abstract

At this level, students are able to understand the task in a way that goes beyond the situation at hand. They are able to generalize to situations they have not experienced, or to conceptualize at a more abstract level. In other words, they not only encode the given information, but also deduce a hypothesis and apply it to a situation that is not given.

4.3 Constructing the Rubric

To construct the actual rubric, the three sources of information discussed so far in this article were used. First, the principles described by Arter and McTighe (2001) allowed the construction of the basic form. I decided the rubric would be analytical. As a result, it took the form of a grid, with each square describing a particular student competency, at a particular level of achievement. Also, because the goal was to construct a generic rubric, each description would remain general, and would not be keyed to the specifics of a particular translation assignment. Second, assumptions about student ability – after these assumptions were revised on the basis of classroom observation – provided a list of competencies. These competencies were used to form the rows in the rubric grid. Third, the levels of the SOLO taxonomy were used to form the columns of the grid, and the taxonomy’s ideas about making an evaluation on the basis of a response’s structure informed the descriptions in each square of the grid. The final scoring rubric is presented in Figure 8 below.

Figure 8

Level One best describes a student performance if the translation suggests that the student has learned little about the competency in question. For example, if the student paragraph on positron emission tomography that was sampled earlier in Figure 5 were representative of the translation as a whole, then the student might earn a Level One for paragraph-level writing. The work is grammatical, and it uses terms and concepts correctly. However, cohesion – in the form of effective use of topic and stress positions – has been ignored.

Level Two best describes a student performance if the translation suggests that the student is able to apply learning in a competency area in an isolated way. For instance, if the student translation sampled in Figure 5 contained scattered examples of correct use of the topic and stress positions, the student would earn a Level Two for paragraph-level writing. Level Three works in a similar way, except that the instances of correct usage are not scattered; instead, they appear regularly throughout the text.

Determining whether a student translation merits a Level Four is somewhat more complex, because student achievement in the competency area has to be not only correct, but also appropriate. The distinction between correctness and appropriateness can be illustrated with examples for each of the competencies.

- Lexical Choice

The translation of “multithérapie” by “HAART” is correct, but it isn’t appropriate if the text the student is translating will be read by the general public (who would probably be more familiar with discussion of “the cocktail”).

- Concepts

A student would be correct if she or he mentioned in an appositive that NNRTIs are “a class of drugs used to treat HIV disease,” but this information wouldn’t be appropriate in a text read by specialists (who know full well what NNRTIs are).

- Sentence Level

A turn of phrase may be correctly crafted with colons, semi-colons, and dashes, but if the translation is a popularization of science for a lay audience, then such complicated punctuation may work against the text’s underlying intent (i.e., to show that science can be explained simply).

- Paragraph Level

Familiar information may be correctly placed in the topic position and unfamiliar information in the stress position, but if every single sentence in the text is written in this way, it may be very monotonous for the reader.

- Text Level

A student may use metadiscourse to make argument structure apparent, but if metadiscourse becomes omnipresent, it may also detract from the quality of the translation.

Level Five best describes a translation if the student is able to move beyond her or his understanding of the situation at hand. In other words, if the translation is appropriate, not only for a setting the student knows (a classroom assignment), but also for one the student has not experienced (publication in the relevant field), then the student has reached the highest level of achievement.

4.4 Using the Rubric

At first glance, the scoring rubric looks like a complicated instrument. It fills a page with an array of packets of information that take some time initially to understand. But the objective in constructing the rubric was actually to simplify things, both for the students in the class and for me as the instructor. After much trial and error in the classroom, I find now that I am able to use the rubric to achieve these objectives.

To help the students understand the rubric, each competency is associated with a colour. Lexical choice is blue, concepts are orange, sentence-level writing is green, etc. Student work is also returned with a copy of the rubric stapled to the front, and the rubric has been colour-coded to show each student’s level of achievement in each competency area. For example, if a student has earned a Level Three in sentence-level writing, the appropriate square in the rubric grid is outlined in green highlighter. The student can also flip to the actual assignment and see that all of the places in the text where there are problems with grammar, spelling, and punctuation are highlighted in green. Because this is done for all five competency areas, students can identify their overall strengths and weaknesses with a quick glance at the rubric, and they can rapidly find specific instances in their work where their weaknesses are evident. Since I started using this system, I find that even the weaker students approach me to say that they understand where they went wrong, and what they have to do in order to improve the next time around.

To arrive at formal grades, each column in the rubric, and its corresponding level of achievement, is associated with a different overall mark. For simplicity’s sake, a number value has been chosen for each column that translates into a letter grade accepted by the university where the course on scientific translation was taught. In other words, Level One is equivalent to 45, a mark well below D and a clear failure; Level Two, at 65, is on the border between a C and a C+; Level Three, at 75, is on the border between a B and a B+; Level Four, at 90, is on the border between an A and an A+; and Level Five, worth 100, is a clear A+. Because students earn marks at different achievement levels, a typical set of class marks contains a range of values that represent the continuum of student performance, from failing to exceptional. Now that I grade in this way, I no longer agonize over the decision to attribute a particular mark, and students report that they find the system transparent and easy to understand.
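The arithmetic behind this conversion can be sketched in a few lines of Python. The level-to-mark values below are the ones given above. The way the five competency marks are combined into one overall mark is not spelled out in this article, so the unweighted average used here is only an assumption, and the names `LEVEL_MARKS`, `COMPETENCIES`, and `overall_mark` are hypothetical.

```python
from typing import Mapping

# Marks attached to each achievement level, as given in the text.
LEVEL_MARKS = {1: 45, 2: 65, 3: 75, 4: 90, 5: 100}

# The five competency rows of the rubric.
COMPETENCIES = (
    "lexical choice",
    "concepts",
    "sentence level",
    "paragraph level",
    "text level",
)


def overall_mark(levels: Mapping[str, int]) -> float:
    """Combine per-competency levels into a single mark.

    ASSUMPTION: an unweighted average of the five marks. The article does
    not state how the competency marks are combined.
    """
    missing = [c for c in COMPETENCIES if c not in levels]
    if missing:
        raise ValueError(f"no level recorded for: {', '.join(missing)}")
    marks = [LEVEL_MARKS[levels[c]] for c in COMPETENCIES]
    return sum(marks) / len(marks)


# Hypothetical student: strong on lexical choice, weaker on paragraph- and
# text-level writing.
example = {
    "lexical choice": 4,
    "concepts": 3,
    "sentence level": 3,
    "paragraph level": 2,
    "text level": 2,
}
print(overall_mark(example))  # (90 + 75 + 75 + 65 + 65) / 5 = 74.0
```

Under this assumption, a student's overall mark can fall anywhere between 45 and 100, which is consistent with the range of class marks described above. An instructor who wished to weight one competency more heavily would need to replace the simple average with a weighted one.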

5. Conclusion

The scoring rubric presented in this article is now the cornerstone of my curriculum. It is introduced at the beginning of the course on scientific translation, and it is used as a springboard to discuss with students both what translation is and what is expected of them when they translate. We talk about the different competency areas, I provide them with examples of various levels of achievement in each area, and we analyze the different types of evidence for each competency. For example, a discussion of sentence-level writing leads to a review of the rules of grammar and punctuation that students are less familiar with, like subject-verb agreement with collective nouns, or the use of the semi-colon with conjunctive adverbs. A discussion of paragraph-level writing provides a segue to a lesson on the effective use of transitions, of subject-verb distance, and of the topic and stress positions in a sentence.

Before giving out an assignment or test, I review the rubric with my students, and we examine the strategies that they can use to succeed. If an assignment requires students to translate a text for a popular science magazine, the discussion might focus, for instance, on the lexical and conceptual features of a translation that are appropriate for lay readers. Conversely, if a test asks students to translate a text that might appear in a scholarly journal, the discussion might explore the textual and cohesive features that are important for specialist readers.

When returning an assignment or test, I make reference to the rubric once again. Students are shown examples of common problems they encountered in their translations, and together we categorize these problems according to the relevant competencies and levels of achievement. Students are also shown examples of translations where the problems were handled successfully, so that they can devise strategies for success the next time around.

In short, the rubric forms the basis for translation pedagogy and for the students’ learning. I feel more confident assigning grades to student work, and students see a grading system that is clear and fair, and that helps them learn.

It is possible that this discussion of the development of the scoring rubric may catch some readers off guard. After all, a scholarly journal is normally the place readers turn to in order to read about an author’s expertise, and yet this article has described a neophyte’s first attempt at teaching and grading scientific translation. However, discussions like this one are vital because of the current demographics among translation instructors. There is a changing of the guard, as older, more experienced professors reach retirement and younger, greener teachers step up to the plate. If translation programs are to deal with this change effectively, there must be opportunities for new teachers to consider the ways in which they can add to their content knowledge by developing their abilities as teachers. One way to do this is to move beyond the notion of intuitive grading – even though it may be based on very well-honed professional judgement – and think in a conscious manner about the path that leads from the first assessment of student work to the choice of an actual grade. The rubric presented here can help in this regard, by turning student evaluation into a more objective and transparent process, and by using that process to shape classroom learning on a more global level.

Appendices

Notes

[1] The duration of secondary school studies in the Canadian province of Ontario was recently changed from five years to four. The year this change was put in place, the number of graduating students doubled. This oversized group, made up of the last students to finish under the five-year system and the first to finish under the four-year one, became known as the double cohort. As they now make their way through Canadian post-secondary institutions, they are placing an increased demand on services and facilities.

[2] Spencer and Spencer (1993) argue that there are five different types of competencies: motives, traits, self-concept, skills, and knowledge. I chose to work with the last two because they are visible, surface characteristics, and because they are easier to develop through training than the other types of competencies.

[3] For example, Goujard (2002) reports on a study that compared the effectiveness of a drug regimen comprising two NRTIs and one PI with that of a regimen made up of two NRTIs and one NNRTI.

[4] I include my assumptions about sentence-level writing under the general heading of “surprises,” because it is surprising that my thinking in this area was correct! As the reader will see, this is the only one of my initial assumptions that proved to be accurate.

[5] Setton uses his model to discuss conference interpreting. However, some parts of the model – notably the interpretation of inference presented here – can be accurately applied to translation as well.

[6] Interestingly, the word “if” in English can also serve the same function. In the sentence, “if he was a good governor, he was a better President,” the word also sets up a concession, and it implies a certain contrast. However, the concessional “if” seems to be used only rarely in English. Most of the time “if” is conditional. The distinction between the conditional and concessional can be difficult to teach if students’ experience with language has not exposed them to both uses.

[7] For example, when commemorating the first World AIDS Day in 1988, the UK’s Princess Anne made an unfortunate deviation from her planned speech to talk about “innocent victims.” See Berridge (1996).

[8] For a discussion of metadiscourse, and for an examination of its high frequency in English-language scholarly writing, see Swales and Feak (2000).

References

- Arter, J.A. and J. McTighe (2001): Scoring Rubrics in the Classroom: Using Performance Criteria for Assessing and Improving Student Performance, Thousand Oaks, Corwin Press.

- Bergenholtz, H. and S. Tarp (eds.) (1995): Manual of Specialized Lexicography, Amsterdam, John Benjamins.

- Berridge, V. (1996): AIDS in the UK: The Making of Policy, 1981-1994, Oxford, Oxford University Press.

- Biggs, J.B. and K.F. Collis (1982): Evaluating the Quality of Learning: The SOLO Taxonomy (Structure of the Observed Learning Outcomes), New York, Academic Press.

- Dancette, J. (1997): “Mapping Meaning and Comprehension in Translation: Theoretical and Experimental Issues,” in Danks, J.H., Shreve, G.M., Fountain, S.B. and M.K. McBeath (eds.), Cognitive Processes in Translation and Interpreting, Thousand Oaks, Sage.

- Foot, D. (1998): Boom, Bust & Echo 2000, Toronto, Macfarlane, Walter and Ross.

- Foot, D. (2001): “Canadian Education: Demographic Change and Future Challenges,” Canadian Education 4-1, pp. 24-27.

- Foot, D. (September 10, 2003): “Baby Boom Meets Baby Bust,” The Globe and Mail, p. A23.

- Gopen, G.D. and J.A. Swan (1990): “The Science of Scientific Writing,” American Scientist 78-6, pp. 550-558.

- Goujard, C. (2002): “Reconstitution immunitaire sous antiprotéases ou inhibiteurs non nucléosidiques,” Transcriptase 102, pp. 10-12.

- Lederer, M. (1981): La traduction simultanée: Expérience et théorie, Paris, Lettres modernes Minard.

- Michel, M. (1995): “Le virus du SIDA,” Science & Vie, numéro hors série, 193, p. 99.

- Setton, R. (1999): Simultaneous Interpretation: A Cognitive-Pragmatic Analysis, Amsterdam, John Benjamins.

- Spencer, B.G. (2001): “Student Enrolment and Faculty Recruitment in Ontario: The Double Cohort, the Baby Boom Echo, and the Aging of University Faculty,” QSEP Research Report No. 365, available at the website of the Research Institute for Quantitative Studies in Economics and Population: http://socserv.mcmaster.ca/qsep/title01.htm

- Spencer, L.M. Jr. and S.M. Spencer (1993): Competence at Work: Models for Superior Performance, New York, John Wiley and Sons.

- Suzuki, K. (1997): Cognitive Constructs Measured in Word Problems: A comparison of students’ responses in performance-based tasks and multiple-choice tasks for reasoning, paper presented at the Annual Meeting of the American Educational Research Association, Chicago.

- Swales, J.M. and C.B. Feak (2000): English in Today’s Research World: A writing guide, Ann Arbor, University of Michigan Press.

- Viard, J-P. (2003): “d4T et risque de lipoatrophie,” Transcriptase 107.

- Vincent, C. (1999): “Le cerveau des sourds entend le langage des signes,” Le Monde (17 février 1999), p. 22.

- Williams, J.M. (1981): Style: Ten Lessons in Clarity and Grace, Glenview, Foresman and Company.

List of tables

Figure 1: Examples of Cognate Terms

Figure 2: Examples of Non-Cognate Terms

Figure 3: Examples of Student-Identified “Terms”

Figure 4: Student Translation Showing Difficulty with General Concepts

Figure 5: Student Translation Showing Difficulty with Paragraph Structure

Figure 6: Student Translation Showing Difficulty with Interpretation of Inference

Figure 8